Small Model LLM Cost Efficiency Score: The 90% Operational Reduction Strategy for 2026

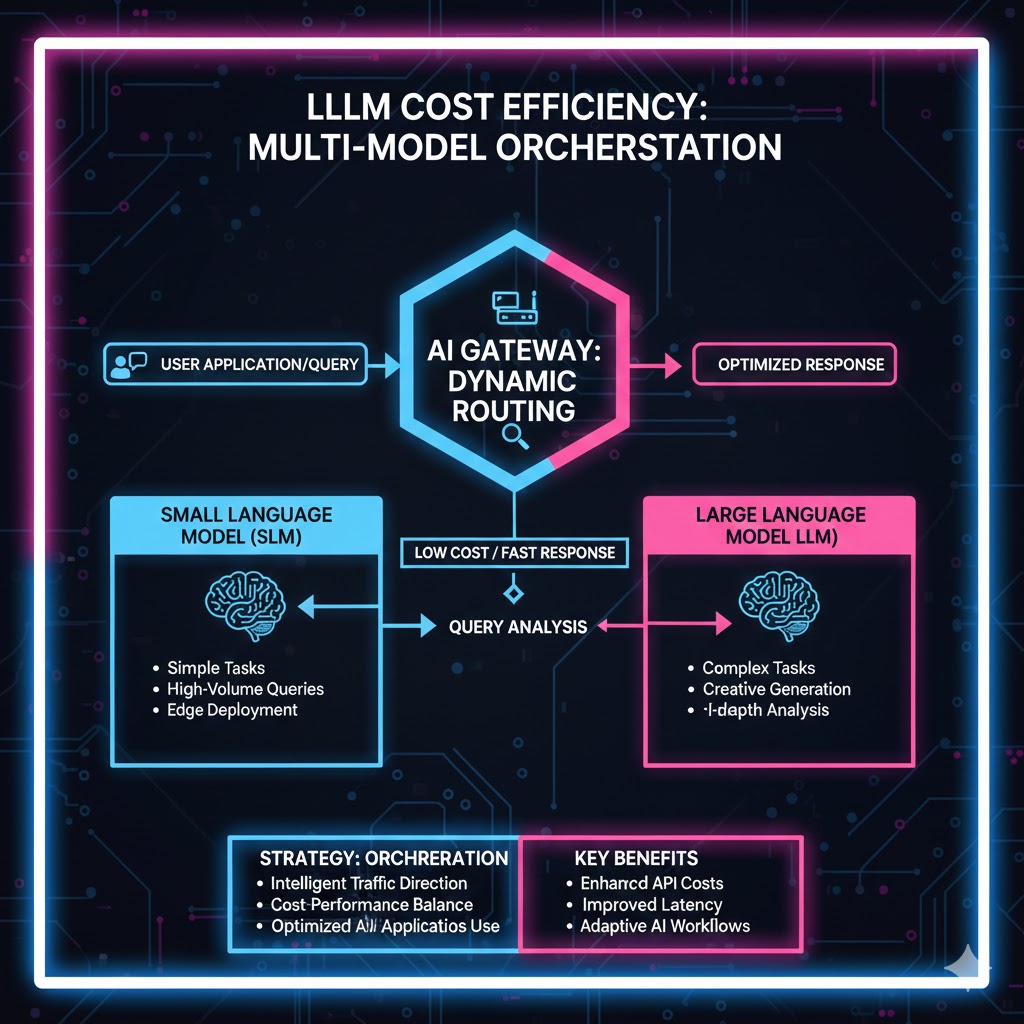

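1. The LLM Cost Bottleneck: Why Smaller Is the Only Sustainable Choice

The Token Trap: Understanding Per-Request Expenditure

The primary financial drain in LLM deployment is token spend. LLM APIs typically bill by the number of tokens processed, counting both the input (the prompt and any supplied context) and the output (the generated response), often at different per-token rates. A token is a subword unit produced by the model's tokenizer: it may be a whole word, a fragment of a word, a punctuation mark, or a piece of whitespace. Using a premium model …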
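The per-request arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not a pricing tool. The model names, the prices, and the workload are hypothetical placeholders, not quotes from any real provider: 2,000 input tokens and 500 output tokens per request, one million requests a month. It also assumes prices are quoted in USD per million tokens, a common convention. Substitute your provider's published rates before drawing conclusions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Hypothetical per-token prices, expressed in USD per 1 million tokens."""
    name: str
    input_per_million: float
    output_per_million: float


def request_cost(pricing: ModelPricing, input_tokens: int, output_tokens: int) -> float:
    """USD cost of one request; input and output tokens are billed separately."""
    return (input_tokens * pricing.input_per_million
            + output_tokens * pricing.output_per_million) / 1_000_000


def monthly_cost(pricing: ModelPricing, input_tokens: int, output_tokens: int,
                 requests_per_month: int) -> float:
    """Scale the per-request cost to a monthly request volume."""
    return request_cost(pricing, input_tokens, output_tokens) * requests_per_month


def savings_fraction(baseline_cost: float, candidate_cost: float) -> float:
    """Fraction of the baseline spend saved by switching to the candidate model."""
    return 1.0 - candidate_cost / baseline_cost


# Hypothetical price points for illustration only.
premium = ModelPricing("premium-model", input_per_million=10.0, output_per_million=30.0)
small = ModelPricing("small-model", input_per_million=0.5, output_per_million=1.5)

if __name__ == "__main__":
    # Assumed workload: 2,000 prompt tokens and 500 response tokens per request.
    costs = {m.name: monthly_cost(m, 2_000, 500, 1_000_000) for m in (premium, small)}
    for name, cost in costs.items():
        print(f"{name}: ${cost:,.2f} per month")
    saved = savings_fraction(costs["premium-model"], costs["small-model"])
    print(f"reduction: {saved:.0%}")
```

With these placeholder numbers the small model is 95% cheaper. The real ratio depends entirely on actual prices and on whether a smaller model needs longer prompts or retries to reach acceptable quality.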