The Infrastructure Dilemma: Optimizing Cost and Scale for Modern Machine Learning

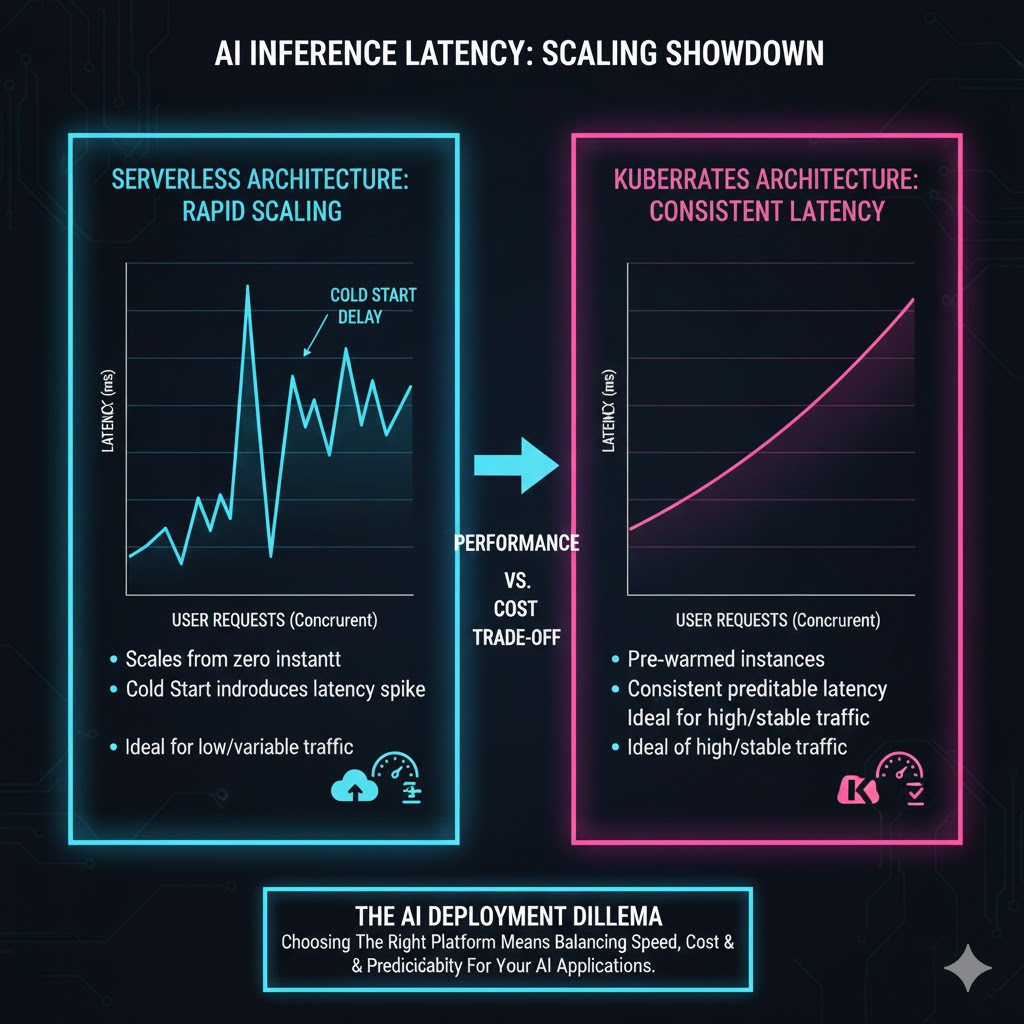

The shift from monolithic applications to microservices has given rise to two dominant paradigms for modern application deployment: Serverless Architecture (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) and Containerization managed by orchestrators like Kubernetes (K8s).

While both offer flexibility and scalability, the choice between them for high-demand, high-variability AI workloads (real-time inference, batch prediction, and model training) profoundly affects Total Cost of Ownership (TCO). This analysis breaks down the economic and operational trade-offs, focusing on three requirements specific to AI: the cost of idle time, cold start latency, and GPU resource management.

1. Architectural Principles and AI Suitability

The fundamental difference lies in resource provisioning and how idle time is handled, which directly impacts TCO for AI.

1.1 Serverless: Pay-per-Execution and Cold Start Penalty

With serverless functions, the cloud provider manages the underlying infrastructure entirely and charges only while code is actively executing.

- Pros for AI: Excellent for low-volume, intermittent inference endpoints (e.g., an API that serves occasional single predictions). Costs scale down to zero during idle periods, significantly reducing TCO when demand is unpredictable.

- Cons for AI: The “cold start” problem remains a critical drawback for low-latency AI inference. When a function is invoked after a period of inactivity, the provider must initialize a new execution environment and the function must load its model, which can take several seconds: a prohibitive delay for real-time applications. Furthermore, serverless functions typically impose hard limits on execution time and memory, and many offer no GPU access at all (AWS Lambda, for example, caps a single invocation at 15 minutes and provides no GPUs), which makes them unsuitable for long-running model training.

1.2 Containerization (Kubernetes): Resource Reservation and Predictability

Kubernetes allows granular control over hardware: each Pod declares the CPU, memory, and GPU resources it needs through resource requests and limits, and Deployments keep the desired number of those Pods running.
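As an illustration of how such a reservation is declared, the sketch below builds a Deployment manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f -` accepts on standard input. It assumes the NVIDIA device plugin is installed so that nodes advertise the `nvidia.com/gpu` resource; the names, image, and resource sizes are hypothetical.

```python
import json


def inference_deployment(replicas: int = 2, gpus_per_pod: int = 1) -> dict:
    """Build a Deployment manifest that reserves CPU, memory, and GPUs for each Pod.

    The name "inference-server" and the image URL are hypothetical placeholders.
    """
    labels = {"app": "inference-server"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "inference-server"},
        "spec": {
            "replicas": replicas,  # number of warm model servers to keep running
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "model",
                            "image": "registry.example.com/inference-server:latest",
                            "resources": {
                                # requests: what the scheduler reserves on a node
                                "requests": {"cpu": "4", "memory": "16Gi"},
                                # limits: hard caps; GPUs are specified under limits
                                "limits": {
                                    "cpu": "4",
                                    "memory": "16Gi",
                                    "nvidia.com/gpu": gpus_per_pod,
                                },
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Usage: python this_script.py | kubectl apply -f -
    print(json.dumps(inference_deployment(), indent=2))
```

Because the scheduler sets these resources aside for each replica, the reserved capacity is billed whether or not requests arrive, which is the idle-cost trade-off discussed next.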

- Pros for AI: Ideal for high-volume, continuous inference and large-scale model training. Because resources are reserved, models stay loaded and latency stays consistent, and specialized hardware such as multi-GPU nodes can be used efficiently.

- Cons for AI: Cost continues to accrue while the service is idle (you pay for reserved compute), which can inflate TCO sharply during off-peak hours. Management complexity is also significantly higher: specialized DevOps/MLOps talent is needed to operate the cluster and keep scaling logic robust.

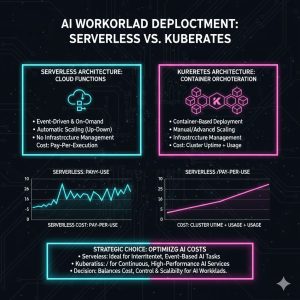

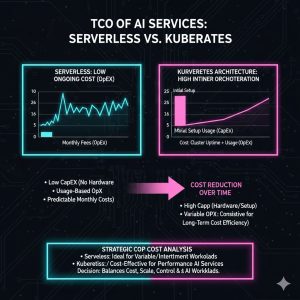

2. TCO Analysis: Operational Cost and Management Overhead

TCO is not just compute cost; it includes human capital, maintenance, and the opportunity cost of architecture lock-in.

2.1 The Cost of Idle Time (Compute Focus)

This is the most critical TCO differentiator for AI inference services:

- Serverless: TCO is optimized for sparse workloads. If your AI service receives 10,000 requests per day but is idle 90% of the time, serverless minimizes costs by charging only for the compute time actually consumed while the model runs (plus a small per-request fee on platforms such as AWS Lambda).

- Kubernetes: TCO is optimized for dense, continuous workloads. If the service runs 24/7 at high utilization, Kubernetes on reserved capacity offers a lower per-request cost than serverless, whose per-unit compute price is higher. However, any reserved capacity left unused is pure waste.
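The idle-time trade-off reduces to simple arithmetic: pay-per-execution cost grows linearly with request volume, while an always-on node costs the same whether it is busy or idle. The sketch below, modeled on the common per-request-fee plus GB-second billing structure, compares the two and computes the break-even volume. All prices are hypothetical placeholders, not quotes from any provider:

```python
HOURS_PER_MONTH = 730
PRICE_PER_GB_SECOND = 0.00002   # hypothetical serverless compute price
PRICE_PER_REQUEST = 0.0000002   # hypothetical per-invocation fee
PRICE_PER_NODE_HOUR = 0.50      # hypothetical always-on node price


def serverless_cost_per_request(duration_s: float, memory_gb: float) -> float:
    """Pay-per-execution: a fixed invocation fee plus compute billed in GB-seconds."""
    return PRICE_PER_REQUEST + duration_s * memory_gb * PRICE_PER_GB_SECOND


def serverless_monthly_cost(requests_per_month: float, duration_s: float, memory_gb: float) -> float:
    """Cost scales linearly with traffic and drops to zero when idle."""
    return requests_per_month * serverless_cost_per_request(duration_s, memory_gb)


def always_on_monthly_cost(nodes: int) -> float:
    """Reserved capacity: every node is billed for every hour, busy or idle."""
    return nodes * PRICE_PER_NODE_HOUR * HOURS_PER_MONTH


def break_even_requests(duration_s: float, memory_gb: float, nodes: int) -> float:
    """Monthly request volume above which the always-on option becomes cheaper."""
    return always_on_monthly_cost(nodes) / serverless_cost_per_request(duration_s, memory_gb)


if __name__ == "__main__":
    monthly = 10_000 * 30  # the 10,000-requests-per-day example from the text
    print(f"serverless: ${serverless_monthly_cost(monthly, 0.5, 2.0):.2f}/month")
    print(f"always-on:  ${always_on_monthly_cost(1):.2f}/month")
    print(f"break-even: {break_even_requests(0.5, 2.0, 1):,.0f} requests/month")
```

With these placeholder numbers, the 10,000-requests-per-day example costs about $6 per month on the pay-per-execution side versus $365 for one always-on node, and the node only becomes cheaper above roughly 18 million requests per month. The sketch deliberately ignores whether a single node could actually sustain that throughput, which a real capacity plan must check.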

2.2 Management and Human Capital Cost (Operational Focus)

Kubernetes requires a specialized team for cluster setup, monitoring, scaling logic, upgrades, and troubleshooting, which increases operational expenditure (OpEx). Serverless abstracts this overhead away, freeing engineers to focus on core AI development, although debugging issues inside the vendor’s black box can be challenging.
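Staff time belongs in the same calculation as the compute bill. A minimal sketch, with hypothetical compute figures, engineering-time fractions, and salary cost, showing how the platform with the smaller cloud bill can still have the higher TCO:

```python
from dataclasses import dataclass


@dataclass
class PlatformCost:
    name: str
    compute_per_month: float  # cloud bill for compute
    ops_fte: float            # fraction of one engineer's time spent operating the platform


def monthly_tco(p: PlatformCost, fte_monthly_cost: float) -> float:
    """Monthly TCO = compute bill + cost of the engineering time spent on operations."""
    return p.compute_per_month + p.ops_fte * fte_monthly_cost


if __name__ == "__main__":
    FTE_MONTHLY_COST = 15_000.0  # hypothetical fully loaded cost of one engineer
    options = [
        PlatformCost("serverless", compute_per_month=500.0, ops_fte=0.1),
        PlatformCost("kubernetes", compute_per_month=300.0, ops_fte=0.5),
    ]
    for p in options:
        print(f"{p.name}: ${monthly_tco(p, FTE_MONTHLY_COST):,.0f}/month")
```

Here the cluster's compute bill is lower, yet half an engineer's time makes its monthly TCO ($7,800) nearly four times that of the serverless option ($2,000).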

2.3 Vendor Lock-in and Portability

Serverless platforms (Lambda, Azure Functions, Cloud Functions) simplify operations but create a high degree of vendor lock-in: triggers, permissions, and deployment tooling are provider-specific, so switching cloud providers typically requires a substantial rewrite of infrastructure code. Kubernetes, being open source, offers excellent portability across managed services (EKS, AKS, GKE) and on-premises data centers, mitigating long-term lock-in risk, which is a key strategic factor for large enterprises.
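One common way to contain that rewrite cost is to keep model logic in provider-neutral code and confine provider-specific details to thin entry-point adapters. A sketch, assuming an AWS Lambda-style handler with an API Gateway proxy-style event on the serverless side and a plain standard-library HTTP server on the container side; `predict`, its toy averaging logic, and `run` are hypothetical:

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features: list[float]) -> float:
    """Provider-neutral inference logic; a toy mean stands in for a real model."""
    return sum(features) / len(features)


# Serverless adapter: Lambda-style signature, API Gateway proxy-style event.
def lambda_handler(event, context):
    features = json.loads(event["body"])["features"]
    return {"statusCode": 200, "body": json.dumps({"score": predict(features)})}


# Container adapter: a plain HTTP endpoint that runs the same way on any cluster.
class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        features = json.loads(self.rfile.read(length))["features"]
        body = json.dumps({"score": predict(features)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(port: int = 8080) -> None:
    """Container entrypoint: serve predictions until the process is stopped."""
    HTTPServer(("0.0.0.0", port), PredictHandler).serve_forever()
```

Only `lambda_handler` would change when moving to another serverless provider, and a container image whose entrypoint calls `run()` behaves the same on EKS, AKS, GKE, or an on-premises cluster. Triggers, permissions, and deployment tooling still need porting, so this reduces rather than removes lock-in.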

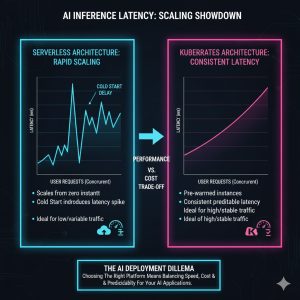

3. Performance, Latency, and Workload Suitability

The optimal architecture is determined by the specific AI task being performed.

3.1 Real-Time Inference (Low Latency Critical)

For services requiring sub-100 ms response times (e.g., recommendation engines):

- Kubernetes is generally superior. Keeping models permanently loaded (“warm”) on dedicated hardware keeps cold starts off the request path and delivers reliable, consistent latency.

- Serverless is viable only with “provisioned concurrency” (an AWS Lambda feature that keeps a set number of instances initialized and is billed continuously while enabled), which largely negates the primary cost advantage of serverless.
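Averages hide the cold-start effect; tail percentiles expose it. The sketch below uses hypothetical latencies (98 warm requests at 40 ms and 2 cold starts at 3,000 ms) and a nearest-rank percentile to show that even a 2% cold-start rate pushes p99 far past a 100 ms budget while the median looks healthy:

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile, with p between 0 and 100."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical latencies in milliseconds: mostly warm requests, a few cold starts.
latencies = [40.0] * 98 + [3000.0] * 2
BUDGET_MS = 100.0

for p in (50, 95, 99):
    value = percentile(latencies, p)
    status = "within" if value <= BUDGET_MS else "OVER"
    print(f"p{p}: {value:.0f} ms ({status} the {BUDGET_MS:.0f} ms budget)")
```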

3.2 Batch Processing and Asynchronous Tasks

For non-urgent, high-throughput tasks (e.g., nightly ETL, large-scale data pre-processing for training):

- Serverless offers a strong advantage here. Functions can be triggered by queue messages or file uploads, scale out massively to process data in parallel, and then scale back to zero, giving a better TCO profile than maintaining a dedicated K8s cluster for burst usage.
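The fan-out pattern can be sketched locally: split the work into batches, process the batches in parallel, and release every worker when done. In the sketch below, a `ThreadPoolExecutor` stands in for queue-triggered function instances (in a real deployment each batch would typically be a queue message or uploaded file handled by its own invocation); `process_batch` and the batch sizes are hypothetical:

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items, size):
    """Split the work list into fixed-size batches, one per function invocation."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def process_batch(batch):
    """Hypothetical per-invocation work: normalize a slice of text records."""
    return [record.strip().lower() for record in batch]


def fan_out(items, batch_size=100, max_concurrency=50):
    """Process all batches in parallel, then shut every worker down (scale to zero)."""
    batches = chunked(items, batch_size)
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        # pool.map returns results in input order, so output order matches input.
        results = list(pool.map(process_batch, batches))
    return [record for batch in results for record in batch]
```

Nothing stays allocated between runs: once the last batch finishes, no workers remain, which mirrors the scale-to-zero billing that makes serverless attractive for nightly or bursty jobs.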

4. Final Verdict: TCO Depends on Utilization Profile

The TCO winner is highly dependent on the utilization profile of the AI workload:

- Choose Serverless if: Your workload is intermittent and low-volume, your latency requirements are relaxed (300 ms or more is acceptable), and minimizing operational overhead is the priority. Best for initial proofs of concept and small internal tools.

- Choose Kubernetes if: Your workload is high-volume and continuous, requires strict low latency (sub-100 ms) or custom GPU/hardware configurations, or must remain portable across vendors as a long-term strategic requirement. Best for core business-critical services and intensive training.
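The verdict above can be condensed into a rule-of-thumb helper. This is a hypothetical sketch, not a cost model: it encodes only the listed criteria (the operational-overhead preference is left to human judgment), and it flags the 100 to 300 ms latency band, which neither rule covers, for an explicit TCO comparison:

```python
from dataclasses import dataclass


@dataclass
class Workload:
    intermittent: bool          # long idle gaps between bursts of traffic
    latency_budget_ms: float    # worst acceptable response time
    needs_gpu: bool             # custom GPU/hardware or model training
    portability_required: bool  # must run on more than one cloud or on-premises


def recommend(w: Workload) -> str:
    """Apply the verdict's criteria in order; returns a platform or a prompt to compare."""
    if w.needs_gpu or w.portability_required or w.latency_budget_ms < 100:
        return "kubernetes"
    if not w.intermittent:
        return "kubernetes"  # high-volume, continuous traffic favors reserved capacity
    if w.latency_budget_ms >= 300:
        return "serverless"
    return "compare TCO"  # intermittent but 100-300 ms: not covered by either rule
```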

REALUSESCORE.COM Analysis Scores

| Evaluation Metric (score out of 10, higher is better) | Serverless (e.g., Lambda) | Containerization (Kubernetes) | Rationale |
| --- | --- | --- | --- |
| Cost of Idle (higher = less idle cost) | 10.0 | 4.0 | Serverless scales to zero, eliminating unused compute cost. |
| Operational Overhead (higher = less overhead) | 9.5 | 6.0 | Serverless infrastructure is fully managed by the vendor. |
| Low-Latency Inference | 5.5 | 9.0 | Serverless cold starts are prohibitive; Kubernetes keeps models warm. |
| Model Training Suitability | 3.0 | 9.5 | Kubernetes offers superior GPU/resource control and supports long-running jobs. |
| TCO for Intermittent Workload | 9.0 | 6.5 | TCO heavily favors Serverless for sporadic use cases. |
| REALUSESCORE.COM FINAL SCORE | 8.0 / 10 | 8.5 / 10 | Kubernetes offers better control and predictability, critical for production AI systems, despite higher OpEx. |