Navigating the Legal Landscape: Generative AI and the Future of Platforms

The rapid evolution of Generative AI, from sophisticated text models to advanced deepfake generators, has forced governments in the U.S. and the E.U. to accelerate their regulatory responses.[1] For our audience of influential American professionals and platform operators, understanding these new laws is not merely a legal exercise; it is essential for future-proofing business models and mitigating risks that could threaten a platform's viability. This analysis focuses on the emerging regulatory frameworks in both jurisdictions and their specific impact on content creation platforms and AI developers.

Architectural Deep Dive: Two Competing Regulatory Philosophies

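While both the U.S. and the E.U. aim to foster innovation, their approaches to the regulation of Generative AI differ fundamentally: the E.U. has adopted a single, centralized, risk-based statute, while the U.S. relies on a decentralized mix of executive action, existing sector-specific law, and litigation.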

1. The European Union: The AI Act’s Risk-Based Approach[2]

The E.U.’s AI Act is the world’s first comprehensive regulatory framework for AI, adopting a strict, risk-based classification:[3]

- Unacceptable Risk: Bans practices such as manipulative techniques that distort human behavior and social scoring.[4]

- High-Risk: Applies strict legal requirements (data governance, transparency) to AI used in critical sectors (e.g., healthcare, employment).

- Limited Risk: Covers systems like chatbots that must inform users they are interacting with an AI.

- General-Purpose AI Models: Foundation models such as GPT-4 or DALL-E fall under a separate set of obligations for general-purpose AI (GPAI) models, which attach to the model provider, with additional duties for models deemed to pose systemic risk.
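Platform operators often encode the tier logic above as an internal triage step before launching a feature. The sketch below is a minimal, hypothetical illustration: the use-case labels and the `triage` helper are assumptions, the Act also defines a minimal-risk tier not listed above, and GPAI obligations attach to the model itself, so they sit outside this use-case lookup. Real classification requires legal review against the Act's annexes, not a lookup table.

```python
from enum import Enum


class RiskTier(Enum):
    """AI Act risk tiers: the three listed above plus the minimal-risk tier."""
    UNACCEPTABLE = "prohibited"
    HIGH = "strict legal requirements"
    LIMITED = "transparency obligations"
    MINIMAL = "no specific obligations"


# Hypothetical, deliberately incomplete mapping from a platform's internal
# use-case labels to tiers. It is an illustration, not a legal determination.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "cv_screening": RiskTier.HIGH,
    "medical_triage": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def triage(use_case: str) -> RiskTier:
    """Look up a use case; unknown ones default to HIGH so they get escalated
    for review instead of being silently treated as low risk."""
    return USE_CASE_TIERS.get(use_case, RiskTier.HIGH)


for case in ["customer_chatbot", "cv_screening", "new_feature_x"]:
    print(f"{case} -> {triage(case).name}")
```

Defaulting unknown cases to the high-risk tier is a conservative design choice: a new feature is reviewed before launch rather than shipped under the lightest obligations by accident.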

Key Transparency Requirements for Generative AI (E.U. Focus)

The E.U. imposes two critical obligations on those who build or deploy Generative AI:

- Transparency: Providers must ensure that AI-generated content is clearly identifiable as such, for example through machine-readable marking (mandatory disclosure).[5]

- Copyright Compliance: Providers of general-purpose models must publish a sufficiently detailed summary of the content used for training and put in place a policy to comply with E.U. copyright law, including honoring rights holders’ opt-outs from text and data mining, to reduce the risk of infringing intellectual property (IP).[6]
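Producing a training-data summary and honoring text-and-data-mining opt-outs both start from an inventory of sources. The following sketch assumes a hypothetical manifest schema (`SourceRecord` with `domain`, `category`, `tdm_opt_out`, and `tokens` fields); it drops opted-out sources and reports each category's share of training tokens. It does not follow any official summary template.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceRecord:
    """One row of a hypothetical internal training-data manifest."""
    domain: str        # where the data was collected
    category: str      # e.g. "licensed", "public_web", "user_uploads"
    tdm_opt_out: bool  # rights holder reserved text-and-data-mining rights
    tokens: int        # size of the source in training tokens


def filter_opt_outs(records: list[SourceRecord]) -> list[SourceRecord]:
    """Drop sources whose rights holders opted out of text and data mining."""
    return [r for r in records if not r.tdm_opt_out]


def summarize(records: list[SourceRecord]) -> dict[str, float]:
    """Share of training tokens per source category (fractions summing to 1)."""
    totals = Counter()
    for r in records:
        totals[r.category] += r.tokens
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {category: n / grand_total for category, n in sorted(totals.items())}


manifest = [
    SourceRecord("news.example", "licensed", tdm_opt_out=False, tokens=600),
    SourceRecord("blog.example", "public_web", tdm_opt_out=True, tokens=300),
    SourceRecord("forum.example", "public_web", tdm_opt_out=False, tokens=400),
]
print(summarize(filter_opt_outs(manifest)))  # → {'licensed': 0.6, 'public_web': 0.4}
```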

2. The United States: Sector-Specific Regulation and Executive Order

The U.S. approach is less centralized, relying on a combination of executive actions, existing sector-specific laws, and potential future legislation:[7]

- Executive Order (EO) Focus: President Biden’s Executive Order on AI (Executive Order 14110, October 2023) prioritized safety, security, and consumer protection.[8] It required developers of the most powerful foundation models, defined as those trained using more than $10^{26}$ integer or floating-point operations, to report to the U.S. government. The order was revoked in January 2025.

- Copyright and IP Battles: Regulation is heavily shaped by ongoing landmark lawsuits over the use of copyrighted material for model training, which are expected to set critical precedents for what constitutes fair use for generative purposes.[9]
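To see roughly which models a compute threshold captures, a common rule of thumb for dense transformer models estimates total training compute as about 6 operations per parameter per training token. The sketch below applies that approximation to the EO's $10^{26}$ threshold and, for comparison, to the $10^{25}$ FLOP threshold above which the E.U. AI Act presumes a general-purpose model poses systemic risk. The model size in the usage line is a hypothetical example, and the heuristic ignores architectural details such as mixture-of-experts routing.

```python
# Thresholds: the EO figure is from the text above; the E.U. figure is the
# AI Act's presumption of systemic risk for general-purpose models.
US_EO_REPORTING_THRESHOLD = 1e26   # total training operations
EU_SYSTEMIC_RISK_THRESHOLD = 1e25  # cumulative training compute, in FLOP


def estimate_training_flop(parameters: float, training_tokens: float) -> float:
    """Rule of thumb for dense transformers: ~6 operations per parameter per
    token (~2 for the forward pass, ~4 for the backward pass)."""
    return 6 * parameters * training_tokens


def threshold_report(parameters: float, training_tokens: float) -> dict[str, bool]:
    """Compare the rough compute estimate against both thresholds."""
    flop = estimate_training_flop(parameters, training_tokens)
    return {
        "exceeds_us_eo_threshold": flop > US_EO_REPORTING_THRESHOLD,
        "exceeds_eu_systemic_risk_threshold": flop > EU_SYSTEMIC_RISK_THRESHOLD,
    }


# Hypothetical model: 400 billion parameters trained on 15 trillion tokens.
print(threshold_report(parameters=400e9, training_tokens=15e12))
# → {'exceeds_us_eo_threshold': False, 'exceeds_eu_systemic_risk_threshold': True}
```

For that hypothetical model the estimate is $6 \times 4 \times 10^{11} \times 1.5 \times 10^{13} = 3.6 \times 10^{25}$ operations: above the E.U. presumption but below the EO's reporting threshold. Note that both thresholds count total operations, not operations per second.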

Impact on Content Creation Platforms: The Compliance Challenge

Content creation platforms (CCPs), which host user-generated content including AI outputs, face immediate and complex compliance burdens under these new regulations. The new laws introduce both operational requirements and legal liabilities.

Managing Deepfakes and Synthetic Media

Both jurisdictions are converging on rules requiring the labeling of synthetic media (deepfakes).[10]

- E.U. Liability: Under the AI Act, providers and deployers of generative systems, including platforms that integrate them, may be held accountable for failing to ensure that generated content is appropriately disclosed as “artificially generated” or “manipulated.”[11]

- U.S. Focus: While there is not yet a unified federal law, the U.S. focus is on preventing election interference and financial fraud via synthetic media, which has led to industry pressure for robust provenance and watermarking technologies to prevent misuse.[12]
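Labeling is easier to enforce when the disclosure is machine-readable and bound to the exact content it describes. The following sketch is a hypothetical illustration, not the C2PA format or any official AI Act marking standard: `build_disclosure` hashes the content, records the generating model and whether the content was manipulated, and returns a user-facing label; `label_matches` checks that the content has not changed since the label was issued. The model identifier `example-model-v1` is a placeholder.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_disclosure(content: bytes, model_id: str, manipulated: bool) -> dict:
    """Return a machine-readable manifest (JSON string) and a human-readable label.

    Hypothetical schema: this is not the C2PA format or any official marking standard.
    """
    record = {
        "sha256": hashlib.sha256(content).hexdigest(),  # ties the record to these exact bytes
        "generator": model_id,
        "ai_generated_or_manipulated": True,
        "manipulated": manipulated,
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }
    label = "AI-manipulated content" if manipulated else "AI-generated content"
    return {"manifest": json.dumps(record, sort_keys=True), "label": label}


def label_matches(content: bytes, manifest: str) -> bool:
    """True only if the content is byte-for-byte what the manifest describes."""
    return json.loads(manifest)["sha256"] == hashlib.sha256(content).hexdigest()


image_bytes = b"...generated image bytes..."
disclosure = build_disclosure(image_bytes, "example-model-v1", manipulated=False)
print(disclosure["label"])                                 # → AI-generated content
print(label_matches(image_bytes, disclosure["manifest"]))  # → True
```

A hash only binds the label to unchanged bytes; attached metadata can still be stripped or the file re-encoded, which is why watermarking is pursued alongside attached provenance records rather than instead of them.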

Data Provenance and Licensing Costs

The most significant financial impact is tied to the data used for training.

- E.U. Compliance Cost: The requirement to publish detailed summaries of training data sources and ensure IP compliance (as per the AI Act) is likely to significantly increase administrative and licensing costs for model developers.[13] Much of this cost is likely to be passed on to the platforms that license these models.

- Transparency for Users: Platforms will need to develop new UI/UX standards that clearly indicate the source and potential IP status of any content generated by an integrated AI tool.

Future-Proofing Strategy: Mitigation and Governance

To ensure operational continuity and stability, platforms must adopt proactive governance strategies to anticipate future regulations.

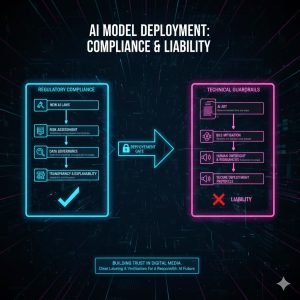

Adopting Technical Guardrails

Future compliance will depend not only on legal documents but also on technology:

- Input/Output Filtering: Implementing strict technical safeguards on both prompts and outputs to keep prohibited content (e.g., illegal deepfakes, hate speech) from being generated or published.

- Auditable Logging: Maintaining detailed, auditable logs of every generated piece of content, including the prompt, the model version used, and a timestamp. These logs provide the trail needed for regulatory audits.
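The two guardrails above can be combined in a single pipeline step. In this minimal sketch, `passes_filter` is a deliberately crude keyword check standing in for real moderation classifiers (the blocked terms are placeholders), and `AuditLog` is a hash-chained, append-only log: each entry stores the SHA-256 hash of the previous one, so editing any past entry, or deleting one from the middle of the log, makes `verify()` fail. All names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Placeholder policy (hypothetical): real systems use trained classifiers
# and human review, not a keyword list.
BLOCKED_TERMS = {"blocked_term_a", "blocked_term_b"}

GENESIS_HASH = "0" * 64  # stands in for the "previous hash" of the first entry


def passes_filter(text: str) -> bool:
    """Crude check: reject text containing any blocked term as a whole word."""
    return set(text.lower().split()).isdisjoint(BLOCKED_TERMS)


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only log; each entry records the hash of the entry before it."""
    entries: list = field(default_factory=list)

    def append(self, prompt: str, output: str, model_version: str, published: bool) -> None:
        body = {
            "prompt": prompt,
            "output": output,
            "model_version": model_version,
            "published": published,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash; editing any entry, or removing one from the
        middle, breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


def screen_and_log(log: AuditLog, prompt: str, output: str, model_version: str) -> Optional[str]:
    """Screen both the prompt and the model output, log the attempt, and
    return the output only if it may be published."""
    published = passes_filter(prompt) and passes_filter(output)
    log.append(prompt, output, model_version, published)
    return output if published else None
```

Hash chaining makes tampering detectable, not impossible: someone who can rewrite the whole log can recompute every hash or silently drop the newest entries, so in practice the latest hash would also be recorded somewhere the log's operator cannot alter.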

The Role of Liability

The future of the regulation of Generative AI hinges on defining where liability falls:

- The “General-Purpose” Model Provider: The E.U. places significant accountability on the developers of foundation models (such as OpenAI or Google), requiring providers of the most capable models to evaluate them, including through adversarial testing, and to assess and mitigate systemic risks.[14]

- The Downstream User/Platform: The platform that integrates the model also retains liability for how the content is used or how it fails to meet transparency requirements.

Final Verdict: The Compliance Imperative for Content Platforms

The transition from a lightly regulated environment to one governed by strict new laws is already underway. The E.U. AI Act sets a global benchmark that U.S. platform operators cannot ignore: it applies to providers that place AI systems or models on the E.U. market wherever they are based, and other jurisdictions may follow its lead. Platforms that fail to invest now in robust data governance, auditable logging, and clear synthetic content labeling will face major compliance hurdles and potential fines. Content creation platforms must prioritize transparency and technical safeguards to ensure a stable AI ecosystem and safeguard their long-term viability in this new regulatory reality.

| Evaluation Metric | Score (out of 10.0) | Note/Rationale |
|---|---|---|
| Regulatory Clarity (EU AI Act) | 9.0 | High clarity due to a consolidated, risk-based framework and mandatory training-data summaries. |
| Regulatory Clarity (US Approach) | 6.5 | Lower clarity due to reliance on executive orders, sector-specific laws, and ongoing litigation. |
| Platform Compliance Burden | 9.5 | High burden for platforms in both regions regarding IP, transparency, and labeling of synthetic media. |
| Future-Proofing (Mitigation Investment) | 8.8 | Requires immediate investment in auditable logging, filtering, and model provenance systems. |
| Consumer/IP Protection Effectiveness | 9.2 | Regulation offers strong mechanisms to protect consumers from deepfakes and creators from IP infringement. |
| REALUSESCORE.COM FINAL SCORE | 8.6 / 10 | Equally weighted average of the five scores above, reflecting the high complexity and compliance cost of the current regulatory environment. |
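The final score is consistent with an equally weighted mean $\bar{s}$ of the five metric scores:

```latex
\bar{s} = \frac{9.0 + 6.5 + 9.5 + 8.8 + 9.2}{5} = \frac{43.0}{5} = 8.6
```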