1. The Revolution on the Developer’s Screen: What is AI Coding?

The world of software development is undergoing a massive transformation, all thanks to a new type of tool: Code Generation AI. These are sophisticated programs, often integrated directly into a developer’s environment, that offer code suggestions, complete functions, and even generate entire blocks of code from a simple command by the human developer. Tools like GitHub Copilot or Amazon CodeWhisperer are now common in professional development teams.

The promise of this technology is huge: unprecedented speed. For some tasks, work that used to take hours can be finished in minutes. It automates the tedious, repetitive parts of coding, allowing humans to focus on complex, creative problem-solving.

However, this incredible speed comes with a hidden cost: uncertain code quality. Because AI learns from billions of lines of code available on the internet—some good, some bad—it can produce code that looks correct but contains subtle errors, security flaws, or poor design. This tension between initial coding speed and long-term code stability is the central issue facing every modern software team.

1.1 The Crucial Trade-Off: Speed Today vs. Stability Tomorrow

In software development, stability refers to how reliable, secure, and easy to maintain the code is. If a piece of code is unstable, the time initially saved by the AI will be lost many times over as developers later spend hours tracking down bugs, patching security vulnerabilities, or trying to understand poorly structured AI-generated functions.

This means we must go beyond simply measuring “how fast” a developer types. We must create a system to measure the true quality of the output: the Code Stability Score (CSS). Without a high CSS, the speed gain is just an illusion.

2. Quantifying the Speed Gain: The Velocity Increase (VI)

When a developer uses a code generation AI, they experience an immediate and noticeable boost in speed. We can quantify this immediate advantage as the Velocity Increase (VI).

2.1 How AI Generates Speed

AI enhances a developer’s speed in several key ways:

- Boilerplate Elimination: Every project requires repetitive, standard code blocks (such as setting up a database connection, creating a simple web server, or formatting data). The AI can generate these entire blocks instantly, saving hours of manual setup.

- Function Completion: If a developer starts typing a function name (e.g., `calculate_user_age_from_dob`), the AI can predict the entire function body from common programming patterns, often getting it right on the first try.

- Language Switching: When a developer needs to write a small piece of code in an unfamiliar language (like a quick Python script when they usually use Java), the AI acts as an instant translator, allowing them to complete the task without searching for syntax help.
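To make the function-completion case concrete, here is a minimal Python sketch of what a correct body for `calculate_user_age_from_dob` might look like. The signature (a `datetime.date` birth date plus an optional reference date) is an assumption for illustration. The important detail is the birthday check, which a plausible-looking suggestion that simply subtracts years would miss.

```python
from datetime import date
from typing import Optional


def calculate_user_age_from_dob(dob: date, today: Optional[date] = None) -> int:
    """Return age in whole years on `today` (defaults to the current date)."""
    if today is None:
        today = date.today()
    if dob > today:
        raise ValueError("date of birth is in the future")
    # Subtract one year if this year's birthday has not happened yet.
    had_birthday = (today.month, today.day) >= (dob.month, dob.day)
    return today.year - dob.year - (0 if had_birthday else 1)


# A naive suggestion such as `today.year - dob.year` would return 30 here.
print(calculate_user_age_from_dob(date(1994, 12, 31), today=date(2024, 6, 1)))  # prints 29
```

With this convention, a person born on 29 February gains a year on 1 March in non-leap years; a team may prefer a different rule, which is exactly the kind of requirement a reviewer should confirm rather than leave to the AI.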

Some studies report that developers using AI assistants complete certain tasks 20 to 50 percent faster than those who do not. This initial speed gain is real and can significantly accelerate product development cycles. This high Velocity Increase is why teams are so eager to adopt these tools.
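The text does not give a formula for VI. One simple way to express it, assumed here for illustration, is the fraction of task time saved relative to an unassisted baseline. Note that "X percent faster" can be read either as time saved or as extra throughput, and the two figures differ for the same measurement, so a team should state which one it reports. The function names below are hypothetical.

```python
def time_saved_fraction(baseline_minutes: float, assisted_minutes: float) -> float:
    """Fraction of the unassisted task time saved by using the AI tool."""
    if baseline_minutes <= 0 or assisted_minutes <= 0:
        raise ValueError("task times must be positive")
    return (baseline_minutes - assisted_minutes) / baseline_minutes


def throughput_gain(baseline_minutes: float, assisted_minutes: float) -> float:
    """Relative increase in tasks completed per unit of time."""
    if baseline_minutes <= 0 or assisted_minutes <= 0:
        raise ValueError("task times must be positive")
    return baseline_minutes / assisted_minutes - 1


# Hypothetical task: 120 minutes unassisted, 80 minutes with AI assistance.
print(f"{time_saved_fraction(120, 80):.0%} time saved")   # 33% time saved
print(f"{throughput_gain(120, 80):.0%} more throughput")  # 50% more throughput
```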

2.2 The Downside of Unchecked Speed

The problem arises when developers begin to trust the AI suggestions too much, simply accepting them without critical review. This “blind trust” in the AI output is the primary cause of a low Code Stability Score.

If the AI suggests a five-line solution to a complex problem, the developer might be tempted to approve it instantly to maintain their high VI. However, if those five lines contain a logic error or a small security flaw, the cost of fixing it during the testing phase or after deployment can erase days or weeks of work, nullifying the initial speed advantage.
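The argument above is essentially an expected-value calculation. A rough sketch, with every number hypothetical: if accepting a suggestion without review saves a few minutes but carries some probability of a defect that takes hours to fix later, the expected net saving can turn negative. The function name is illustrative, and review time itself is left out for simplicity.

```python
def expected_net_minutes_saved(
    minutes_saved: float, defect_probability: float, fix_cost_minutes: float
) -> float:
    """Time saved now minus the expected time spent fixing a defect later."""
    if not 0.0 <= defect_probability <= 1.0:
        raise ValueError("defect_probability must be between 0 and 1")
    return minutes_saved - defect_probability * fix_cost_minutes


# Hypothetical: 10 minutes saved per suggestion, with a 5% chance of a defect
# that takes 10 hours (600 minutes) to find and fix after deployment.
print(expected_net_minutes_saved(10, 0.05, 600))  # -20.0
# If review lowers the defect chance to 1% (review time not counted here):
print(expected_net_minutes_saved(10, 0.01, 600))  # 4.0
```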

3. The Quality Challenge: Defining the Code Stability Score (CSS)

The Code Stability Score (CSS) is a measure of the code’s long-term quality, security, and maintainability. It answers the question: Will this code cause problems later? A high CSS is essential for any professional software product.

3.1 Three Critical Components of Code Stability

The CSS should not be treated as a single opaque number but as a composite built from three measurable aspects where AI is known to struggle:

1. Defect Introduction Rate (DIR):

This measures how often AI-generated code introduces functional bugs (e.g., a loop that runs too many times, a variable that is not properly defined, or a logic error). AI code rarely fails outright; more often it contains subtle flaws that appear only under specific conditions, such as an unusual input. A low DIR means the code is functionally robust.
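The text does not fix a formula for DIR. One common way to express a defect rate, assumed here, is defects per thousand lines of code (KLOC), counted only over lines that came from accepted AI suggestions. The counts below are hypothetical, and in practice attributing a defect to AI-generated lines requires tracking where each line came from.

```python
def defects_per_kloc(defect_count: int, lines_of_code: int) -> float:
    """Defects per 1,000 lines of code."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defect_count * 1000 / lines_of_code


# Hypothetical sprint: 12 defects traced to 4,000 accepted AI-generated lines,
# versus 6 defects in 3,000 hand-written lines.
ai_dir = defects_per_kloc(12, 4_000)
human_dir = defects_per_kloc(6, 3_000)
print(ai_dir, human_dir)  # 3.0 2.0
```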

2. Security Vulnerability Rate (SVR):

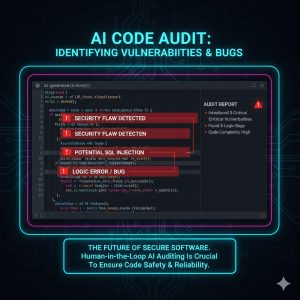

This is arguably the most serious risk. AI models learn from public code repositories, many of which contain known security vulnerabilities. The AI might generate code that uses outdated, insecure functions or introduces common vulnerabilities (like SQL injection or buffer overflows). A high SVR means the AI code is a potential liability. This is why careful auditing is critical.
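As an illustration of the SQL injection risk, the sketch below uses Python's built-in `sqlite3` module with an in-memory database and a hypothetical `users` table. The first function builds the query by string formatting, a pattern that appears widely in public code; the second passes the value as a bound parameter, so the input is treated as data rather than as SQL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "alice@example.com"), ("bob", "bob@example.com")],
)


def find_user_unsafe(name: str) -> list:
    # VULNERABLE: user input is pasted directly into the SQL text.
    query = f"SELECT name, email FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name: str) -> list:
    # Safer: the "?" placeholder makes sqlite3 bind the value as data.
    return conn.execute(
        "SELECT name, email FROM users WHERE name = ?", (name,)
    ).fetchall()


malicious = "x' OR '1'='1"
print(len(find_user_unsafe(malicious)))  # 2: every row leaks
print(len(find_user_safe(malicious)))    # 0: no user has that literal name
```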

3. Maintainability Index (MI):

Code is almost never written once; it is constantly read, modified, and fixed by others. Maintainability measures how easy it is for a human developer to understand the AI-generated code. Because AI output often favors compactness over clarity, it can be unnecessarily complex or lack clear comments. A low MI means future bug fixes will be slow and expensive.
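The text does not say how its MI is calculated. For reference, one widely used formulation (the variant reported by Visual Studio's code metrics) combines Halstead volume, cyclomatic complexity, and lines of code, rescaled to 0 to 100 where higher means easier to maintain. The sketch below implements only that formula; computing the three inputs from real source code needs a separate analysis tool, so the metric values here are hypothetical.

```python
import math


def maintainability_index(
    halstead_volume: float, cyclomatic_complexity: float, lines_of_code: int
) -> float:
    """Maintainability Index rescaled to 0..100 (higher is easier to maintain)."""
    if halstead_volume <= 0 or lines_of_code <= 0:
        raise ValueError("halstead_volume and lines_of_code must be positive")
    raw = (
        171
        - 5.2 * math.log(halstead_volume)       # natural log of Halstead volume
        - 0.23 * cyclomatic_complexity
        - 16.2 * math.log(lines_of_code)
    )
    return max(0.0, raw * 100 / 171)


# Hypothetical metrics for a short, simple function vs. a long, branchy one.
print(round(maintainability_index(100, 2, 10), 1))
print(round(maintainability_index(5000, 25, 300), 1))
```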

3.2 The Stability Problem: Inherited Flaws

The core stability problem is simple: AI is a mirror of the data it learns from. If the training data contains millions of examples of a specific, insecure coding pattern, the AI will happily suggest that same insecure pattern as the “best” answer. This means code quality depends heavily on the model’s training data, not necessarily the current best practices. This is why human oversight remains indispensable.
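A familiar example of an inherited pattern is storing passwords as unsalted MD5 hashes, which appears in a great deal of older public code. The sketch contrasts it with salted PBKDF2-HMAC-SHA256 from Python's standard `hashlib` module, chosen here as one reasonable standard-library option; the iteration count is illustrative and should be tuned, and the helper names are hypothetical.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # illustrative work factor; tune for your hardware


def hash_password_insecure(password: str) -> str:
    # Inherited pattern: fast, unsalted MD5 is easy to brute-force.
    return hashlib.md5(password.encode()).hexdigest()


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) using salted PBKDF2-HMAC-SHA256."""
    salt = os.urandom(16)  # a fresh random salt for every password
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, expected_key: bytes) -> bool:
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(key, expected_key)


salt, key = hash_password("correct horse")
print(verify_password("correct horse", salt, key))  # True
print(verify_password("wrong guess", salt, key))    # False
```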

4. The Developer Efficiency and Quality (DEQ) Score Framework

A successful development team must balance speed (VI) with quality (CSS). The ideal scenario is achieving a high Velocity Increase without lowering the Code Stability Score. This balance is defined by the Developer Efficiency and Quality (DEQ) Score.

The DEQ Score is used by management to ensure that the adoption of AI is truly beneficial and not just a short-term trick.
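The text does not specify how VI and CSS combine into the DEQ Score. One possible choice, assumed here purely for illustration, is the harmonic mean of the two scores on what appears to be the 0 to 10 scale of the benchmark table. Unlike a simple average, it stays low when either input is low, so a large speed gain cannot hide poor stability. The function name is hypothetical.

```python
def deq_score(vi: float, css: float) -> float:
    """Hypothetical DEQ: harmonic mean of VI and CSS, both on a 0-10 scale."""
    for name, value in (("vi", vi), ("css", css)):
        if not 0.0 <= value <= 10.0:
            raise ValueError(f"{name} must be between 0 and 10")
    if vi == 0 or css == 0:
        return 0.0
    return 2 * vi * css / (vi + css)


# Same combined total of 14 points, very different balance:
print(round(deq_score(7.0, 7.0), 2))   # 7.0
print(round(deq_score(10.0, 4.0), 2))  # 5.71
```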

4.1 How to Maximize the DEQ Score

Maximizing the DEQ Score requires strategic changes to the development process:

- AI as a Draft Tool: Developers must treat all AI-generated code as a first draft, not a final product. Every line must be reviewed with the same level of suspicion as a new team member’s code. This review adds a small amount of time to the task but drastically reduces the later cost of fixing bugs.

- Focus on Testing: Automated testing must be strengthened. The test suite (unit tests, integration tests) should be the first line of defense against unstable AI code. If the AI code fails the tests, it is rejected immediately, and the developer must refine the prompt or write the code manually.

- Customization and Fine-Tuning: Large companies should invest in fine-tuning their AI models on their own private, high-quality codebases. Training the AI on code that already meets company security and style standards can noticeably raise output quality (CSS).
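The testing point can be made concrete with a small unit-test suite. Below, a hypothetical AI draft of the age function mentioned in Section 2.1 uses naive year subtraction. Tests written with Python's built-in `unittest` module encode the expected behavior, including the "birthday not yet reached" case, so the flawed draft fails and would be rejected before merge. All function names here are illustrative.

```python
import io
import unittest
from datetime import date


def ai_draft_age(dob: date, today: date) -> int:
    # Hypothetical AI suggestion: looks right, ignores whether the birthday has passed.
    return today.year - dob.year


def reviewed_age(dob: date, today: date) -> int:
    # Corrected version: subtract a year if this year's birthday is still ahead.
    before_birthday = (today.month, today.day) < (dob.month, dob.day)
    return today.year - dob.year - int(before_birthday)


def make_age_tests(age_fn):
    """Build a TestCase class that checks any age function against the spec."""

    class AgeTests(unittest.TestCase):
        def test_on_birthday(self):
            self.assertEqual(age_fn(date(1990, 5, 10), date(2020, 5, 10)), 30)

        def test_day_before_birthday(self):
            self.assertEqual(age_fn(date(1990, 5, 10), date(2020, 5, 9)), 29)

    return AgeTests


def passes(age_fn) -> bool:
    """Run the suite quietly and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(make_age_tests(age_fn))
    result = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)
    return result.wasSuccessful()


print(passes(ai_draft_age))  # False: the draft is rejected
print(passes(reviewed_age))  # True
```

In a real pipeline the same gate would typically run in continuous integration, so a failing suite blocks the merge automatically.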

By slowing down just enough to review for stability flaws, teams can maintain a high VI while keeping the CSS high, resulting in a positive, sustainable DEQ Score.


5. REALUSESCORE.COM Analysis: Code Stability Score Benchmarks

This analysis compares the effectiveness of Code Generation AI across different development scenarios, focusing on the inevitable trade-off between the gain in coding speed (Velocity Increase, VI) and the risk to long-term quality (Code Stability Score, CSS).

| Development Scenario | Primary AI Use Case | Velocity Increase (VI) | Code Stability Score (CSS) | Rationale |
|---|---|---|---|---|
| Simple Utility Scripts | Generating one-off scripts (e.g., data conversion, file cleanup). | High (9.0) | High (8.5) | Tasks are simple, limited in scope, and follow predictable patterns. Low risk of systemic failure or security issues. |
| Boilerplate/Setup Code | Setting up new services (e.g., database connections, API structure). | Very High (9.5) | Moderate (7.0) | Speed is at its highest, but there is a risk of slightly insecure or outdated connection methods. Requires strong review. |
| Complex Algorithm Generation | Writing core logic or financial calculations. | Moderate (6.5) | Low (5.5) | AI often struggles with nuanced business logic. Generated code may run without errors yet be logically flawed, leading to a high Defect Introduction Rate (DIR). |
| Legacy Code Refactoring | Updating old code or switching languages. | High (8.0) | Very Low (4.0) | AI may introduce major security flaws, raising the Security Vulnerability Rate (SVR), by suggesting modern-looking but incorrect fixes for old vulnerabilities. Highly risky without intense scrutiny. |
| Code with Strong Test Coverage | Writing new features where every function has a corresponding automated test. | High (9.0) | Very High (9.0) | The high CSS rests on the safety net of the automated test suite, which catches many defects early and lets the developer maximize speed (VI). |
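To use the benchmark programmatically, a team might encode the scenarios and flag those that need strong review. The sketch below copies the VI and CSS values from the table above; the CSS cutoff of 7.5 is an arbitrary assumption for illustration and is not part of the analysis.

```python
from typing import NamedTuple


class Scenario(NamedTuple):
    name: str
    vi: float   # Velocity Increase, as listed in the table
    css: float  # Code Stability Score, as listed in the table


SCENARIOS = [
    Scenario("Simple Utility Scripts", 9.0, 8.5),
    Scenario("Boilerplate/Setup Code", 9.5, 7.0),
    Scenario("Complex Algorithm Generation", 6.5, 5.5),
    Scenario("Legacy Code Refactoring", 8.0, 4.0),
    Scenario("Code with Strong Test Coverage", 9.0, 9.0),
]

CSS_REVIEW_THRESHOLD = 7.5  # hypothetical cutoff below which review must be intensive


def needs_strong_review(scenarios, threshold=CSS_REVIEW_THRESHOLD):
    """Names of scenarios with CSS below `threshold`, least stable first."""
    flagged = sorted((s for s in scenarios if s.css < threshold), key=lambda s: s.css)
    return [s.name for s in flagged]


print(needs_strong_review(SCENARIOS))
# ['Legacy Code Refactoring', 'Complex Algorithm Generation', 'Boilerplate/Setup Code']
```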