💡 The Paradigm Shift From Chatbots to Autonomous Agents

The 2020s have brought a rapid transition from simple Large Language Model (LLM) chatbots to complex agentic AI systems. These systems are capable of autonomous decision-making, planning, tool use, and self-correction. They represent the next frontier in software development, enabling what we now call Autonomous Digital Workers.

To build and manage these complex systems, developers rely on specialized orchestration layers known as AI Agent Frameworks. Two of the most widely used are LangChain and AutoGen. Both support building AI agents, but they embody fundamentally different architectural philosophies. This pillar guide takes a deep dive into the state of these frameworks, their core mechanics, and the ecosystem they are shaping.

For a foundational understanding of this shift, explore our detailed analysis on the shift from simple conversational models to the creation of truly autonomous digital workers.

1. The Core Role and Architecture of Agent Frameworks

An AI Agent Framework serves as the operating system for LLMs, providing the necessary tools to connect the brain (the LLM) to the world (tools, data, memory).

The Four Pillars of Agent Architecture

- Planning: Breaking down complex user goals into smaller, executable steps.
- Memory: Storing past interactions, intermediate results, and long-term knowledge (often in vector databases).
- Tool Use (Tool Orchestration): Calling external APIs and tools, such as a Python code interpreter or a web search API.
- Reflection and Self-Correction: Evaluating results and revising the plan when a step fails.

LangChain and AutoGen both address these pillars but structure them differently, leading to distinct advantages in scalability and complexity.
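To make the four pillars concrete, here is a minimal, framework-agnostic sketch of a single agent loop. It is not LangChain's or AutoGen's API: the `Agent` class, the `add` and `shout` tools, and the scripted `plan` and `revise` methods are hypothetical stand-ins for LLM calls, chosen so the example runs without any model or network access.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tools standing in for external APIs or a code interpreter.
def add(arg: str) -> str:
    a, b = (int(x) for x in arg.split(","))
    return str(a + b)


def shout(arg: str) -> str:
    return arg.upper()


TOOLS: dict[str, Callable[[str], str]] = {"add": add, "shout": shout}


@dataclass
class Agent:
    # Memory: every (tool, argument, result) the agent has seen, including failures.
    memory: list[tuple[str, str, str]] = field(default_factory=list)

    def plan(self, goal: str) -> list[tuple[str, str]]:
        # Planning: a real agent would ask an LLM to decompose `goal`.
        # This stub returns a fixed plan whose first step contains a deliberate error.
        return [("add", "2,three"), ("shout", "result is {prev}")]

    def revise(self, arg: str) -> str:
        # Self-correction: a real agent would feed the error back to the LLM.
        return arg.replace("three", "3")

    def run(self, goal: str, max_retries: int = 2) -> str:
        prev = ""
        for tool, arg in self.plan(goal):
            arg = arg.format(prev=prev)
            for _ in range(max_retries + 1):
                try:
                    result = TOOLS[tool](arg)  # Tool use
                    break
                except ValueError as err:
                    # Reflection: record the failure, then retry with a revised argument.
                    self.memory.append((tool, arg, f"error: {err}"))
                    arg = self.revise(arg)
            else:
                raise RuntimeError(f"{tool} failed after {max_retries + 1} attempts")
            self.memory.append((tool, arg, result))
            prev = result
        return prev


agent = Agent()
print(agent.run("add two and three, then announce the result"))  # RESULT IS 5
```

The first tool call fails on purpose; the error is written to memory and the revised argument succeeds, which is the reflect-and-retry cycle in its simplest form.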

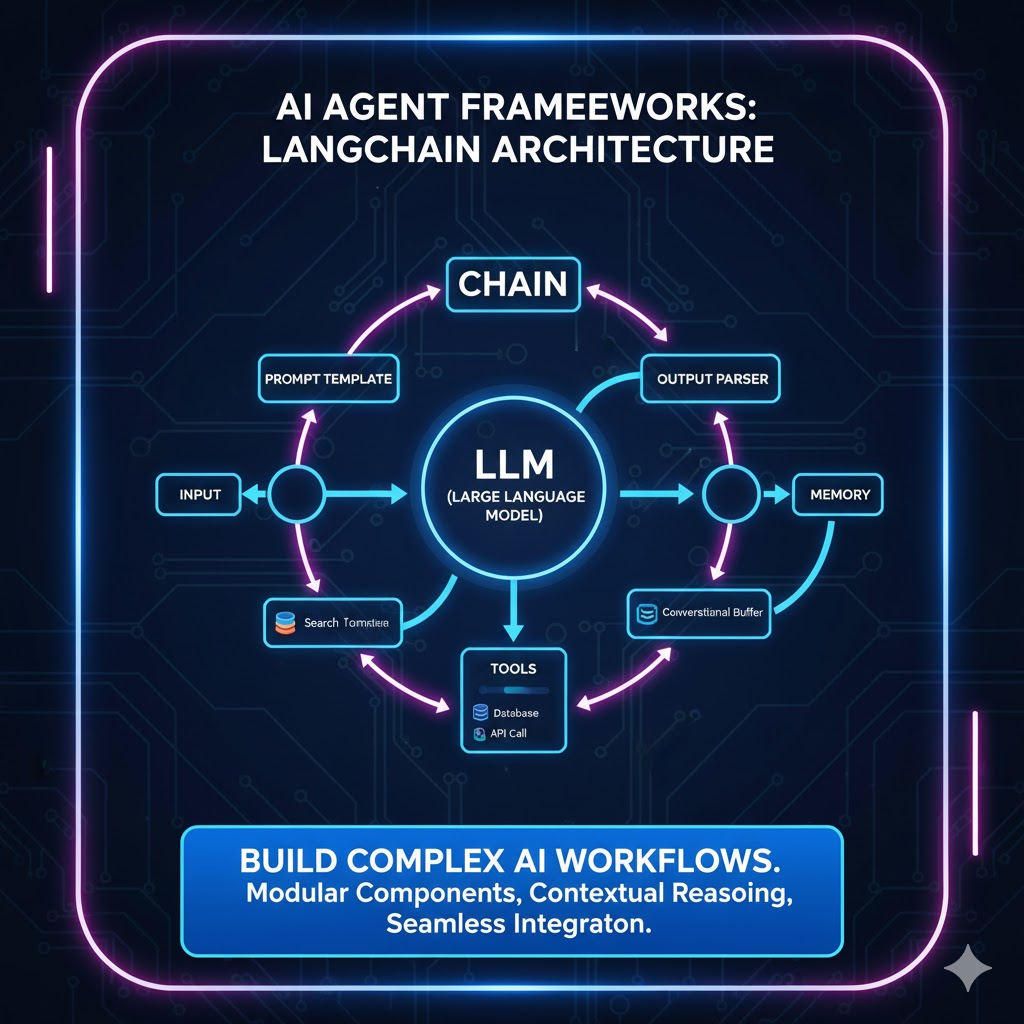

2. Deep Dive: LangChain, the Modular Toolkit

LangChain emerged as one of the first major agent frameworks and is built around the principle of modularity. It provides a large collection of composable components that developers combine into multi-step sequences known as “Chains.”

LangChain Architecture and Components

- Chains: The sequential logic engine, defining the order in which steps run (e.g., “Search database,” then “Summarize result,” then “Format output”).
- Retrieval-Augmented Generation (RAG): LangChain is one of the most widely used frameworks for RAG. Its toolkit simplifies connecting documents (PDFs, internal wikis) to an LLM through document loaders, text splitters, and vector store integrations (e.g., Chroma, Pinecone).
- Agents: In LangChain, an “Agent” is typically a single LLM loop that chooses among a defined set of tools to reach a goal, guided by a central planning prompt.
- Use Case Strength: Ideal for complex RAG pipelines, data extraction, and single-agent workflows that require predictable, sequential execution.
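The “Search database,” then “Summarize result,” then “Format output” chain, with a RAG-style retrieval step, can be sketched in plain Python as function composition. This illustrates the idea only: LangChain's own API (for example, composing Runnables with the `|` operator in the LangChain Expression Language) differs, and the in-memory `DOCS` list, word-overlap retriever, and truncating `summarize` step are hypothetical stand-ins for a vector store and an LLM call.

```python
from typing import Callable

# Hypothetical in-memory corpus standing in for document loaders plus a vector store.
DOCS = [
    "LangChain composes components into chains.",
    "AutoGen coordinates agents through conversation.",
    "Vector stores index document chunks for retrieval.",
]

Step = Callable[[str], str]


def search_database(query: str) -> str:
    # Toy retrieval: return the document sharing the most words with the query.
    # A real RAG pipeline would embed chunks and run a similarity search instead.
    words = set(query.lower().split())
    return max(DOCS, key=lambda doc: len(words & set(doc.lower().rstrip(".").split())))


def summarize(text: str) -> str:
    # Placeholder for an LLM summarization call: keep the first four words.
    return " ".join(text.split()[:4])


def format_output(text: str) -> str:
    return f"Answer: {text}"


def chain(*steps: Step) -> Step:
    # Compose steps so that each step's output becomes the next step's input.
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value

    return run


pipeline = chain(search_database, summarize, format_output)
print(pipeline("how does AutoGen coordinate agents"))
# Answer: AutoGen coordinates agents through
```

Because every step shares the same input and output type, any step can be swapped (for example, replacing the word-overlap search with a vector-store query) without touching the others, which is the modularity argument in miniature.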

LangChain Strengths and Weaknesses

| Aspect | Strength | Weakness |
| --- | --- | --- |
| RAG/Data | Best-in-class component library for data loading, chunking, and vector database integration. | Managing many independent components can add maintenance overhead. |
| Modularity | Components are reusable across projects, offering high flexibility. | Chains can become rigid, making dynamic replanning for highly unpredictable tasks difficult. |
| Ecosystem | Massive community and integrations for virtually all major LLMs and vector stores. | CPU-bound work in concurrent pipelines can be limited by Python's Global Interpreter Lock (GIL); I/O-bound LLM calls are much less affected. |
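The GIL caveat deserves a qualification. The GIL prevents Python threads from executing bytecode in parallel, which matters for CPU-heavy pure-Python steps, but most of the time in an agent pipeline is spent waiting on remote LLM APIs. Waiting is I/O-bound, so `asyncio` can overlap many calls in a single thread (LangChain components also expose async variants such as `ainvoke`). The sketch below uses `asyncio.sleep` as a hypothetical stand-in for a network request and does not call any LangChain API.

```python
import asyncio
import time


async def fake_llm_call(prompt: str, latency: float = 0.2) -> str:
    # Hypothetical stand-in for a network-bound LLM request: awaiting the sleep
    # hands control back to the event loop, just as awaiting an HTTP response would.
    await asyncio.sleep(latency)
    return f"response to {prompt!r}"


async def run_concurrently(prompts: list[str]) -> list[str]:
    # gather() schedules all calls at once and returns results in input order.
    return list(await asyncio.gather(*(fake_llm_call(p) for p in prompts)))


start = time.perf_counter()
results = asyncio.run(run_concurrently([f"q{i}" for i in range(5)]))
elapsed = time.perf_counter() - start
print(f"{len(results)} calls in {elapsed:.2f}s")
```

Five simulated calls of 0.2 seconds each finish in roughly the time of one because the waits overlap; the GIL becomes the bottleneck only when the work between calls is CPU-bound Python code.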

3. Deep Dive: AutoGen, the Multi-Agent Conversation

AutoGen, developed by Microsoft, introduced a paradigm shift by modeling agent interactions not as sequential chains but as conversational collaboration. The framework treats every participant (including the user and the code executor) as a distinct agent with an assigned role.

AutoGen Architecture and Collaboration

- Configurable Agents: AutoGen lets developers define a team of agents, each with specific skills (e.g., a “Coder,” a “Critic,” and a “User Proxy”).
- Group Chat Mechanics: The core of AutoGen is the multi-agent group chat. Agents take turns posting to a shared conversation, with a manager selecting the next speaker; they propose solutions, execute code, and give feedback until a termination condition is met (for example, an agent signals that the task is done or a maximum number of rounds is reached). The process loosely resembles a human development team.
- User Proxy Agent: The User Proxy acts as a stand-in for the human. It can execute code on the team's behalf and, depending on its configuration, pause to ask the user for confirmation or clarification before running potentially risky code.
- Use Case Strength: Well suited to complex problem-solving that requires iterative code generation, debugging, and dynamic collaboration, such as data science tasks, software development, and technical troubleshooting.
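The turn-taking and termination logic of a group chat can be sketched without any LLM. In this hypothetical example, `coder_reply`, `critic_reply`, and `user_proxy_reply` are scripted functions standing in for model calls, and speakers simply rotate round-robin. AutoGen's group chat manager can instead pick the next speaker with an LLM, and its real classes and signatures differ from this toy.

```python
from dataclasses import dataclass
from typing import Callable

Message = tuple[str, str]  # (speaker name, content)


@dataclass
class ToyAgent:
    name: str
    reply: Callable[[list[Message]], str]  # a real agent would call an LLM here


def coder_reply(history: list[Message]) -> str:
    # Revise the draft once the critic has given any feedback.
    feedback = [text for speaker, text in history if speaker == "Critic"]
    if feedback:
        return "area = lambda r: math.pi * r ** 2"
    return "area = lambda r: 3.14 * r ** 2"


def critic_reply(history: list[Message]) -> str:
    latest_code = history[-1][1]
    return "Looks good." if "math.pi" in latest_code else "Use math.pi instead of 3.14."


def user_proxy_reply(history: list[Message]) -> str:
    # Human stand-in: end the chat once the critic approves, otherwise ask for a revision.
    return "TERMINATE" if history[-1][1] == "Looks good." else "Please revise."


def group_chat(agents: list[ToyAgent], task: str, max_rounds: int = 10) -> list[Message]:
    history: list[Message] = [("User", task)]
    for turn in range(max_rounds):
        speaker = agents[turn % len(agents)]  # round-robin speaker selection
        message = speaker.reply(history)
        history.append((speaker.name, message))
        if "TERMINATE" in message:  # termination condition
            break
    return history


team = [
    ToyAgent("Coder", coder_reply),
    ToyAgent("Critic", critic_reply),
    ToyAgent("UserProxy", user_proxy_reply),
]
for speaker, text in group_chat(team, "Write a circle-area function."):
    print(f"{speaker}: {text}")
```

The `max_rounds` cap matters as much as the termination message: without it, agents that never agree would keep spending LLM calls indefinitely.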

AutoGen Strengths and Weaknesses

| Aspect | Strength | Weakness |
| --- | --- | --- |
| Multi-Agent | A leading framework for collaborative problem-solving and dynamic, complex reasoning. | Resource intensive: every agent turn is another LLM call, which adds cost and latency. |
| Code Execution | Built-in support for executing and debugging code inside the agent team's loop, optionally isolated in Docker containers. | Documentation and community support are less mature and less broad than LangChain's, resulting in a steeper learning curve. |
| Dynamic Flow | The conversation can change direction at runtime (who speaks next, which approach is tried), enhancing robustness. | Results can be less predictable than LangChain's defined chains, making quality assurance (QA) harder. |
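A common way to keep generated code from crashing the agent process is to run it in a separate interpreter with a timeout, then feed the exit code and output back into the conversation so the team can debug the error on its next turn. The helper below is a hypothetical sketch using only Python's standard library, not AutoGen's executor; AutoGen itself can be configured to run code in Docker containers, which isolates it far more strongly than a plain subprocess.

```python
import subprocess
import sys


def execute_generated_code(source: str, timeout: float = 5.0) -> tuple[int, str]:
    """Run agent-generated Python in a child interpreter and capture its output.

    A separate process contains crashes and runaway loops, but it is NOT a
    security sandbox: the child keeps the parent's filesystem and network access.
    """
    try:
        completed = subprocess.run(
            [sys.executable, "-c", source],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return -1, f"timed out after {timeout}s"
    return completed.returncode, completed.stdout + completed.stderr


exit_code, output = execute_generated_code("print(sum(range(10)))")
print(exit_code, output.strip())  # 0 45

# A failing snippet returns a non-zero exit code plus the traceback text,
# which an agent team would pass back to the coder as feedback.
exit_code, output = execute_generated_code("1 / 0")
print(exit_code, "ZeroDivisionError" in output)  # 1 True
```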

4. Framework Showdown: Comparison Table

To aid in framework selection, we compare the key technical differences between these two titans.

| Feature | LangChain | AutoGen | Advantage |
| --- | --- | --- | --- |
| Core Paradigm | Chains (sequential steps) | Conversation (multi-agent) | LangChain (RAG and data processing) |
| Tool Orchestration | Tool calling through API wrappers | Coder agent plus User Proxy execution | AutoGen (software development, debugging) |
| RAG Implementation | Highly modular and component-based | Integrated as a specialized agent or tool within the conversation | LangChain (clear winner) |
| Scalability Focus | Scales via external systems and well-defined components | Scales by increasing the number of collaborating agents | AutoGen (complex, dynamic tasks) |
| Learning Curve | Moderate (extensive documentation) | Steep (requires understanding agent communication protocols) | LangChain (faster onboarding) |

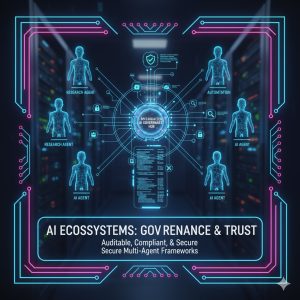

5. The Future Ecosystem: Governance and Specialization

The future of AI agent frameworks is not simply about which one wins, but about how the entire ecosystem evolves toward specialization and the guardrails that autonomy requires.

Governance and Safety

As agents become more autonomous, safety and governance become paramount. Frameworks are rapidly integrating modules for:

- Risk Mitigation: Preventing agents from executing malicious or unauthorized code.
- Audit Trails: Detailed logging of every decision, tool call, and LLM prompt for regulatory compliance and debugging.
- Human-in-the-Loop (HITL): AutoGen's User Proxy is an early example. Future frameworks are likely to require human review points for critical decisions, particularly in finance and medicine.
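Audit trails and human review points can both live in a thin wrapper around every tool call. The sketch below is hypothetical and framework-agnostic: `governed_call`, the `RISKY_TOOLS` set, and the in-memory `AUDIT_LOG` are illustrative names, and the `approve` callback stands in for whatever review step (a console prompt, a ticket queue, a User Proxy agent) a real deployment would use.

```python
import json
import time
from typing import Callable

AUDIT_LOG: list[str] = []  # a real system would use durable, append-only storage
RISKY_TOOLS = {"delete_file", "transfer_funds"}  # hypothetical tool names


def governed_call(
    tool_name: str,
    tool: Callable[[str], str],
    arg: str,
    approve: Callable[[str, str], bool],
) -> str:
    # Human-in-the-loop: risky tools run only after explicit approval.
    approved = tool_name not in RISKY_TOOLS or approve(tool_name, arg)
    result = tool(arg) if approved else "blocked: approval denied"
    # Audit trail: one JSON record per decision, whether or not the tool ran.
    AUDIT_LOG.append(json.dumps({
        "ts": time.time(),
        "tool": tool_name,
        "arg": arg,
        "approved": approved,
        "result": result,
    }))
    return result


def deny_all(tool_name: str, arg: str) -> bool:
    # Stand-in for a human reviewer who rejects every request.
    return False


print(governed_call("search", lambda q: f"results for {q}", "agent frameworks", deny_all))
print(governed_call("transfer_funds", lambda a: f"sent {a}", "100 USD", deny_all))
print(len(AUDIT_LOG))  # 2
```

Logging the denied call as well as the approved one is deliberate: auditors and debuggers usually need to see what an agent tried to do, not only what it did.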

Specialization and Verticalization

The trend is moving away from general-purpose frameworks towards specialized verticalized platforms.

- Data Agent Frameworks: Focused entirely on SQL query generation, data cleaning, and visualization.
- Cybersecurity Agents: Designed for threat detection and automated penetration testing.
- Hardware Integration: Frameworks are beginning to integrate lower-level communication with specialized hardware accelerators, such as those covered in our guide to budget gaming hardware, to optimize performance for on-device AI tasks.

This specialization means that developers will likely use components from both LangChain and AutoGen, wrapped within a higher-level, task-specific platform.

✅ Conclusion: Choosing Your Framework

The choice between LangChain and AutoGen should be driven entirely by the task at hand.

- Choose LangChain if: Your primary challenge is integrating diverse data sources (RAG) or building structured data pipelines, and you need high predictability in your agent's execution path. It is the best choice for data-centric projects.
- Choose AutoGen if: Your problem requires complex, iterative planning, code execution, and internal debugging, and you need a system that can dynamically self-correct through conversation. It is the best choice for development and research tasks.

Ultimately, the competitive advantage in the 2026 AI ecosystem belongs to the developer who can skillfully leverage the strengths of both frameworks, integrating them into robust, audited, and highly specialized Autonomous Digital Workers.