1. The Imperative for AI Governance in the Age of Regulation

The Rise of the Black Box Risk

The rapid growth of Artificial Intelligence (AI) deployment across sensitive domains, from financial credit scoring and healthcare diagnostics to public safety and automated hiring, has made the “black box” untenable. A black-box AI system, in which inputs and outputs are known but the internal decision-making process is opaque, poses profound risks: it can silently propagate societal biases, produce unfair or discriminatory outcomes, and make regulatory compliance impossible to demonstrate. This opacity is among the greatest threats to enterprise trust and accountability, because without visibility into an algorithm’s logic the focus shifts from innovation to liability. For large organizations, AI is no longer a fringe IT project; it is a fiduciary responsibility.

Global Compliance and the Regulatory Mandate

Regulators and standards bodies worldwide are moving decisively to set clear standards for AI trustworthiness: the European Union with its landmark EU AI Act, the United States with the voluntary NIST AI Risk Management Framework (AI RMF), and various national data protection acts. Together, these laws and frameworks define a new reality: governance is no longer optional. It is a prerequisite for operating AI systems, especially those categorized as “high-risk.”

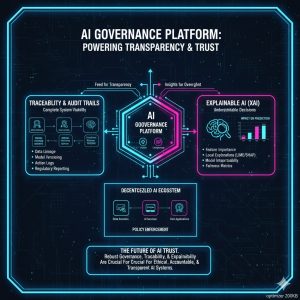

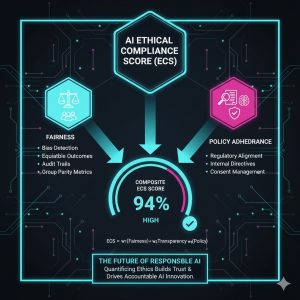

Compliance requires verifiable evidence across three core ethical pillars: Fairness and Non-Discrimination, Accountability and Human Oversight, and, critically, Transparency and Explainability (XAI). The core function of an AI Governance Platform (AIGP) is to transform these abstract principles into measurable, auditable, and operational technical controls, ultimately driving up an organization’s Ethical Compliance Score.

2. Key Components of the Ethical AI Governance Platform (AIGP)

The AI Governance Platform serves as the central control plane, unifying policies, technical guardrails, and reporting mechanisms across the entire AI lifecycle, from data ingestion to model deprecation. Its architecture is built upon three pillars designed to enforce system transparency and ethical alignment.

Pillar 1: Model and Data Lineage Traceability

The foundation of transparency is Traceability. If an organization cannot prove where its data came from or how its model was trained, it cannot prove compliance. The AIGP must provide immutable audit trails for every component of the AI system.

- Data Provenance and Quality Management: The platform tracks the source, modifications, and quality metrics of all training datasets. This allows auditors to verify that data is legally sourced (e.g., GDPR-compliant) and free from systematic biases that would lead to discriminatory outcomes.

- Model Versioning and Lifecycle: It manages different versions of the AI model, documenting every change in parameters, hyperparameters, and fine-tuning steps. This provides a clear audit trail, linking any specific output to the exact version of the model that generated it, a non-negotiable requirement for regulatory scrutiny.

- Artifact Store and Metadata: The platform centralizes all model artifacts, code, and documentation, ensuring that the full developmental history is accessible and that the rationale behind every design choice is documented.
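
One way to make an audit trail tamper-evident is to chain entries together by hash, so that each record commits to everything before it. The sketch below is illustrative only: the `LineageLog` class, the event fields, and the dataset and model names are hypothetical, and a production platform would also need durable storage and access control. Strictly speaking, this makes tampering detectable rather than impossible.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with this event's canonical JSON."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


@dataclass
class LineageLog:
    """Append-only log in which each entry commits to the one before it."""
    entries: list = field(default_factory=list)

    def append(self, event: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry_hash = _digest(prev_hash, event)
        self.entries.append({"event": event, "prev_hash": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical lineage: dataset -> trained model version -> individual decision.
log = LineageLog()
log.append({"type": "dataset_registered", "dataset": "loans_2024_q1", "source": "internal_crm"})
log.append({"type": "model_trained", "model": "credit_scorer", "version": "1.3.0",
            "dataset": "loans_2024_q1"})
log.append({"type": "prediction", "model": "credit_scorer", "version": "1.3.0",
            "decision_id": "d-001"})
chain_is_intact = log.verify()
```

Because every prediction event records the model version, and every training event records the dataset, an auditor can walk the chain from a single decision back to the data that shaped it.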

Pillar 2: Explainable AI (XAI) and Interpretability Tools

Traceability proves what happened; Explainability proves why. The AIGP must integrate XAI tools that demystify complex “black-box” models (like deep neural networks) to provide human-understandable explanations for their decisions.

- Local Explainability (SHAP/LIME): Tools like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are essential. They generate case-by-case explanations for a single decision, allowing a loan officer or a doctor to tell a customer why the AI made a specific recommendation. This is crucial for maintaining human-in-the-loop decision-making.

- Global Interpretability: The platform provides summaries of the model’s overall behavior, identifying the most influential features across all decisions. This helps data scientists and compliance officers understand the general principles the model has learned, ensuring they align with policy.

- Counterfactual Explanations: The platform generates explanations that describe the minimum changes needed in the input data to change the outcome (e.g., “If your income were $10,000 higher, your loan application would have been approved”). This form of explanation is especially valuable for fairness and transparency because it is actionable: it empowers users to challenge decisions and understand their recourse.
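
To make the counterfactual idea concrete, here is a minimal sketch that searches for the smallest increase in one feature that flips a rejection into an approval. The scoring model, its integer weights, the feature names, and the applicant are all made up for illustration; real counterfactual methods search over several features at once and constrain changes to plausible, actionable ones.

```python
from typing import Optional

# Hypothetical points-based credit model (integer weights keep the arithmetic exact).
WEIGHTS = {"income_k": 2, "debt_k": -3, "years_employed": 5}
BIAS = -100
APPROVAL_THRESHOLD = 0


def score(applicant: dict) -> int:
    """Linear score: bias plus the weighted sum of the applicant's features."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def approved(applicant: dict) -> bool:
    return score(applicant) >= APPROVAL_THRESHOLD


def minimal_increase(applicant: dict, feature: str, step: int = 1,
                     max_steps: int = 1000) -> Optional[int]:
    """Smallest multiple of `step` added to `feature` that yields approval.

    Returns 0 if the applicant is already approved and None if no increase
    within `max_steps` steps changes the outcome.
    """
    if approved(applicant):
        return 0
    candidate = dict(applicant)
    for k in range(1, max_steps + 1):
        candidate[feature] = applicant[feature] + k * step
        if approved(candidate):
            return k * step
    return None


# Income and debt are expressed in thousands of dollars.
applicant = {"income_k": 45, "debt_k": 10, "years_employed": 4}
delta = minimal_increase(applicant, "income_k")
if delta:
    message = (f"If your income were ${delta * 1000:,} higher, "
               "your loan application would have been approved.")
else:
    message = "No income-only change was found that alters the decision."
```

For this applicant the score is -20, each additional $1,000 of income adds 2 points, and so the search stops at an increase of $10,000.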

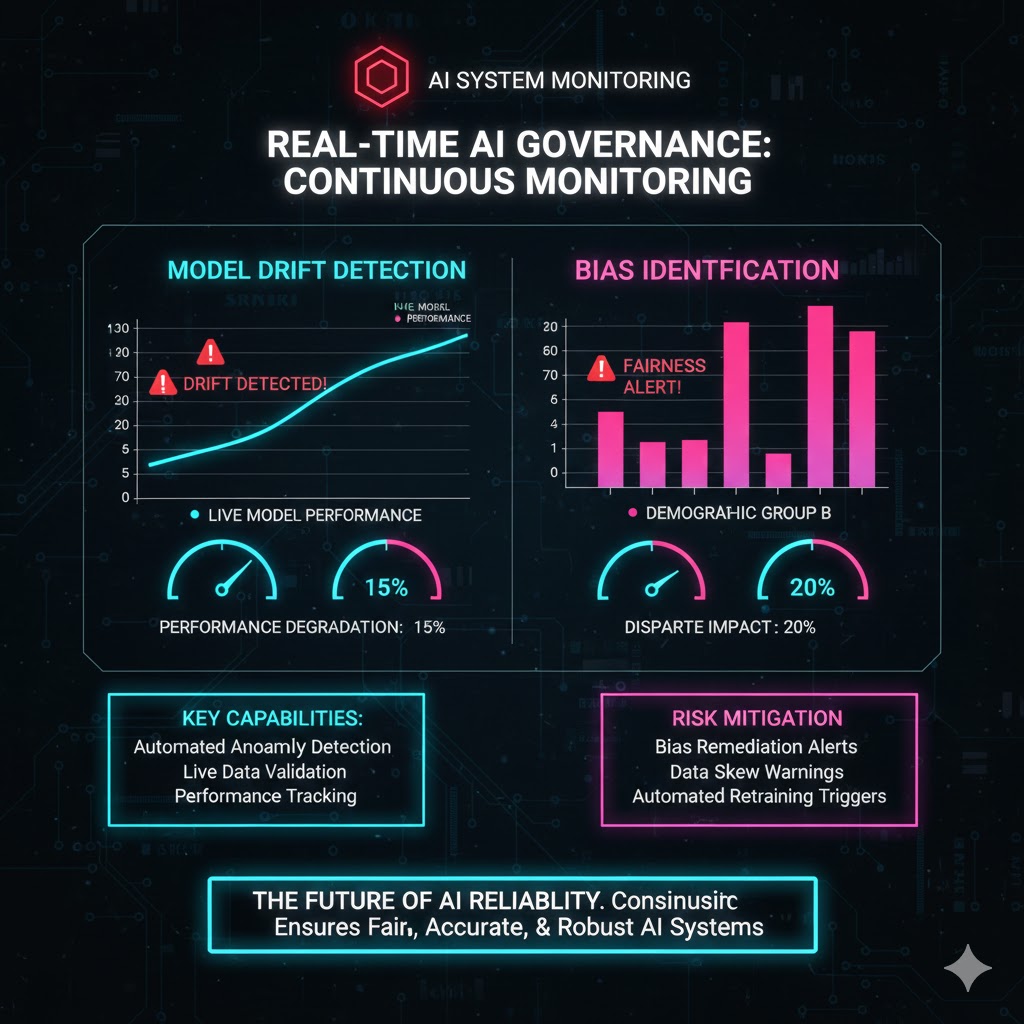

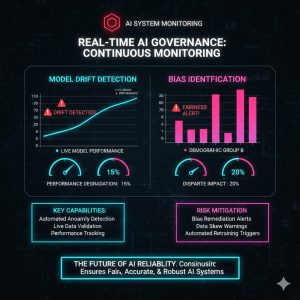

Pillar 3: Continuous Monitoring and Automated Risk Scoring

Ethical compliance is not a one-time check but a continuous operational process. AI models can drift over time, degrading in performance or becoming biased due to changing real-world data. The AIGP must incorporate real-time monitoring to detect and alert on these ethical failures.

- Bias and Fairness Monitoring: The platform automatically monitors key fairness metrics (e.g., Equal Opportunity Difference, Statistical Parity Difference) across protected demographic groups (where legally permissible) to identify and alert if the model begins to exhibit discriminatory behavior in production.

- Performance and Drift Detection: It tracks model performance against human-labeled benchmarks and alerts when accuracy drops or when the relationship between inputs and outputs changes (concept drift), indicating a potential operational failure.

- Automated Risk Scoring: The AIGP assigns a real-time, quantitative Ethical Compliance Score (ECS) to every active model. This score aggregates metrics from fairness, transparency, and adherence to regulatory policy, providing executives with a clear, single indicator of risk exposure. This automated scoring mechanism is the system’s most powerful tool for proactive risk management.
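
The two fairness metrics named above can be computed directly from logged predictions. The sketch below uses a common convention (unprivileged rate minus privileged rate, so values near zero indicate parity); the group labels, the sample records, and the 0.10 alert threshold are assumptions for illustration, not values prescribed by any regulation.

```python
from collections import defaultdict

ALERT_THRESHOLD = 0.10  # hypothetical tolerance on the absolute metric value


def selection_rate(y_pred: list) -> float:
    """Share of cases that received the favorable prediction (1)."""
    return sum(y_pred) / len(y_pred)


def true_positive_rate(y_true: list, y_pred: list) -> float:
    """Share of truly positive cases predicted positive.

    Assumes the group contains at least one truly positive case.
    """
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(preds_for_positives) / len(preds_for_positives)


def fairness_report(records: list, privileged: str, unprivileged: str) -> dict:
    """records: iterable of (group, y_true, y_pred) tuples with binary labels."""
    by_group = defaultdict(lambda: {"y_true": [], "y_pred": []})
    for group, y_true, y_pred in records:
        by_group[group]["y_true"].append(y_true)
        by_group[group]["y_pred"].append(y_pred)
    priv, unpriv = by_group[privileged], by_group[unprivileged]
    return {
        "statistical_parity_difference":
            selection_rate(unpriv["y_pred"]) - selection_rate(priv["y_pred"]),
        "equal_opportunity_difference":
            true_positive_rate(unpriv["y_true"], unpriv["y_pred"])
            - true_positive_rate(priv["y_true"], priv["y_pred"]),
    }


# Made-up production sample: eight decisions per group.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
records = (
    [("A", t, p) for t, p in zip(labels, [1, 1, 1, 0, 1, 1, 1, 0])]
    + [("B", t, p) for t, p in zip(labels, [1, 1, 0, 0, 1, 1, 0, 0])]
)
report = fairness_report(records, privileged="A", unprivileged="B")
alerts = [name for name, value in report.items() if abs(value) > ALERT_THRESHOLD]
```

In this sample, group B is selected at a rate of 0.50 versus 0.75 for group A, and qualified members of group B are approved at 0.50 versus 0.75, so both metrics come out at -0.25 and both would raise an alert.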

3. Measuring Success: The Ethical Compliance Score (ECS) Framework

The core deliverable of a functional AI Governance Platform is a quantifiable score that summarizes the system’s adherence to ethical and legal mandates. The Ethical Compliance Score (ECS) moves AI ethics from abstract philosophy to measurable, auditable risk management, tracked with the same operational discipline that FinOps (Financial Operations) applies to cost.

ECS Calculation Components

The ECS is a weighted index, combining scores from the following technical and policy dimensions:

| Dimension | Weight | Measurement Metric | Purpose |
| --- | --- | --- | --- |
| I. Transparency & Explainability | 40% | XAI Coverage Index | Measures the percentage of decisions for which a human-readable explanation is automatically generated and recorded. Higher index = Higher Transparency. |
| II. Fairness & Bias Mitigation | 35% | Bias Disparity Gap (Delta_B) | The maximum statistical difference in outcomes (e.g., False Positive Rate) between the most- and least-favored protected groups. Low Delta_B = High Fairness. |
| III. Regulatory Adherence | 15% | Policy Audit Pass Rate | Percentage of mandatory governance checkpoints (e.g., data source validation, human override log) passed in the last audit cycle. |
| IV. Accountability & Oversight | 10% | Human Override Rate (H.O.R.) | Tracks human intervention/escalation rate. A high H.O.R. can flag a model needing re-training; a zero H.O.R. can flag a lack of human oversight. |
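
As a worked example, the weighted index can be sketched as follows. The weights come from the table; everything else is an assumption made for illustration, because the table does not say how each metric is normalized. Here the fairness sub-score is taken as 1 minus Delta_B, and the oversight sub-score uses a hypothetical "healthy band" for the Human Override Rate, penalizing both a zero rate and a rate outside the band.

```python
# Weights taken from the table above.
WEIGHTS = {
    "transparency": 0.40,
    "fairness": 0.35,
    "regulatory": 0.15,
    "oversight": 0.10,
}


def oversight_score(hor: float, low: float = 0.01, high: float = 0.10) -> float:
    """Map the Human Override Rate to [0, 1]. The band and penalties are assumptions."""
    if low <= hor <= high:
        return 1.0   # humans intervene at a plausible rate
    if hor == 0.0:
        return 0.0   # no overrides at all: oversight may be absent
    return 0.5       # too few or too many overrides: needs review


def ethical_compliance_score(xai_coverage: float, delta_b: float,
                             audit_pass_rate: float, hor: float) -> float:
    """Weighted ECS on a 0-100 scale; all inputs are fractions in [0, 1]."""
    sub_scores = {
        "transparency": xai_coverage,
        "fairness": 1.0 - delta_b,      # assumption: low gap means high score
        "regulatory": audit_pass_rate,
        "oversight": oversight_score(hor),
    }
    return 100.0 * sum(WEIGHTS[name] * sub_scores[name] for name in WEIGHTS)


# Hypothetical model: 92% XAI coverage, 0.08 disparity gap,
# 90% audit pass rate, 4% human override rate.
ecs = ethical_compliance_score(xai_coverage=0.92, delta_b=0.08,
                               audit_pass_rate=0.90, hor=0.04)
```

With these inputs the four weighted terms are 0.368, 0.322, 0.135, and 0.100, for an ECS of roughly 92.5 out of 100.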

Driving Compliance through the ECS

A simple dashboard displaying the ECS transforms ethical risk management. Executive leadership gains an immediate, continuous view of their enterprise’s exposure. A model operating with an ECS below an organizational threshold (e.g., below 75%) is automatically flagged for mandatory intervention: either Human-in-the-Loop intervention is escalated, or the model is pulled from production until retraining and re-validation are complete. This operational feedback loop ensures that the platform is not just a reporting tool but an active, policy-enforcing component of the AI architecture.
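
The feedback loop described above amounts to a small policy function. In this sketch the 75-point threshold follows the example in the text, while the `pull_below` floor that separates escalation from removal is a hypothetical parameter, since the text does not say how the choice between the two interventions is made. The model names and scores are invented.

```python
from enum import Enum


class Action(Enum):
    KEEP_SERVING = "keep_serving"
    ESCALATE_TO_HUMAN = "escalate_to_human"
    PULL_FROM_PRODUCTION = "pull_from_production"


def required_action(ecs: float, threshold: float = 75.0,
                    pull_below: float = 60.0) -> Action:
    """Map an ECS (0-100) to the intervention the policy requires."""
    if ecs >= threshold:
        return Action.KEEP_SERVING
    if ecs >= pull_below:
        # Below threshold but above the floor: route decisions to a human.
        return Action.ESCALATE_TO_HUMAN
    # Below the floor: remove until retraining and re-validation complete.
    return Action.PULL_FROM_PRODUCTION


# Hypothetical fleet of production models and their current scores.
fleet = {"credit_scorer": 92.5, "resume_ranker": 71.0, "fraud_detector": 48.0}
actions = {name: required_action(score) for name, score in fleet.items()}
flagged = [name for name, action in actions.items()
           if action is not Action.KEEP_SERVING]
```

Keeping the policy in code rather than in a dashboard setting means the thresholds themselves can be versioned and audited alongside the models they govern.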

This emphasis on quantifiable scoring mirrors broader industry efforts to govern other forms of risk with operational metrics, including the financial viability of new AI business models. For further insight into how financial models and governance intersect, see the analysis “Small Model LLM Cost Efficiency Score: The 90% Operational Reduction Strategy for 2026.”

4. Strategic Implementation and Future-Proofing

Organizational Integration: The Central AI Ethics Committee

The AIGP is only as effective as the organizational structure supporting it. Successful deployment requires the establishment of a Cross-Functional AI Ethics Committee (or AI Review Board) composed of legal, compliance, data science, and business unit leaders. This committee’s primary role is to define the specific ECS thresholds, interpret the Bias Disparity Gap (Delta_B) in the context of business objectives, and adjudicate high-risk escalations. The AIGP provides the data, but the committee provides the human judgment and accountability.

The Future of Regulatory APIs

The next generation of AI governance platforms will move from simply reporting compliance scores to enforcing compliance in real time via API. Imagine a future where regulatory bodies or partners can directly query a standardized, secure API to receive a real-time System Transparency Score before engaging in a high-risk transaction. This level of automated, verifiable trust will become a major competitive differentiator, making the AIGP an integral part of the business transaction layer itself.
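
No such standardized API exists today, so the following is purely a thought experiment about what a verifiable score response might look like. The field names, the model identifier, and the shared-secret HMAC scheme are all hypothetical; a real design would more likely use asymmetric signatures so that verifiers never hold the signing key.

```python
import hashlib
import hmac
import json


def _sign(body: dict, secret: bytes) -> str:
    """HMAC-SHA256 over the canonical JSON encoding of the body."""
    message = json.dumps(body, sort_keys=True).encode("utf-8")
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def build_attestation(model_id: str, score: float, issued_at: str,
                      secret: bytes) -> dict:
    """Return a score payload plus a signature the recipient can check."""
    body = {"model_id": model_id, "transparency_score": score, "issued_at": issued_at}
    return {**body, "signature": _sign(body, secret)}


def verify_attestation(attestation: dict, secret: bytes) -> bool:
    """Recompute the signature and compare it in constant time."""
    body = {k: v for k, v in attestation.items() if k != "signature"}
    claimed = attestation.get("signature", "")
    return hmac.compare_digest(_sign(body, secret), claimed)


# Hypothetical exchange between a platform and a partner sharing a secret.
shared_secret = b"example-secret-for-illustration-only"
attestation = build_attestation("credit_scorer:1.3.0", 92.5,
                                "2026-01-15T09:00:00Z", shared_secret)
is_valid = verify_attestation(attestation, shared_secret)
```

The point of the signature is that the querying party does not have to trust the transport or an intermediary: a score that has been altered after issuance fails verification.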

The move to platform-based, scored governance ensures that organizations are not just reacting to regulation but proactively building trustworthy, sustainable AI systems that benefit both the bottom line and society.

REALUSESCORE.COM Analysis Score

This score evaluates the AI Ethics Governance Platform (AIGP) as a necessary enterprise solution for achieving mandatory compliance, mitigating systemic risk, and building trust through verifiable transparency.

| Evaluation Metric | Max. Potential Impact | AIGP Solution Score (Out of 10) | Rationale |
| --- | --- | --- | --- |
| System Transparency (XAI) | Full Model Auditability | 9.6 | Integrates XAI tools (SHAP/LIME) and comprehensive lineage tracing, eliminating the black box for audit purposes. |
| Ethical Compliance (Regulatory) | Full Adherence (EU AI Act/NIST) | 9.5 | Provides mandatory audit trails, continuous fairness monitoring, and a quantifiable risk score (ECS) for proactive reporting. |
| Risk Mitigation (Operational) | Automated Drift/Bias Detection | 9.3 | Automated monitoring detects model drift and bias before they degrade performance in production, minimizing legal and reputational damage. |
| Accountability & Governance | Clear Role Definition & Logging | 9.0 | Centralized logging of human overrides and decisions, ensuring clear accountability for all AI-driven outcomes. |
| Long-Term Trust & Stakeholder Value | Brand Reputation & Adoption | 9.4 | Demonstrable transparency builds consumer and partner trust, critical for wide-scale, high-stakes AI adoption. |

The AI Ethics Governance Platform is not an optional tool but a mandatory infrastructure layer that translates ethical concepts into measurable, auditable data, safeguarding the enterprise’s future in a highly regulated AI ecosystem.