1. The LLM Cost Bottleneck: Why Smaller is the Only Sustainable Choice

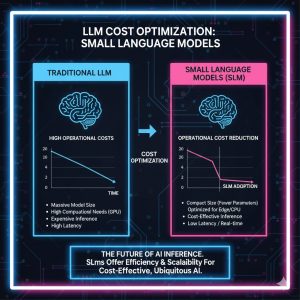

The Token Trap: Understanding Per-Request Expenditure

The primary financial drain in LLM deployment is the Token Spend. LLM providers charge based on the number of tokens (sub-word units covering words, word fragments, punctuation, and whitespace) processed for both input (the prompt/context) and output (the response). Using a premium model for a simple task like checking the sentiment of a customer review is akin to using a supercar for a trip to the local grocery store: massive overkill with a high cost-per-mile.

The financial trade-off is staggering: while a top-tier model might cost $30 per million output tokens, a highly optimized SLM (e.g., Llama 3.2 3B or GPT-4o Mini) can cost as little as $0.06 to $0.25 per million tokens, a cost reduction factor of over 100x for simple, repetitive tasks. This difference is amplified across the billions of tokens that large enterprises process monthly. For a high-volume scenario processing 10 billion tokens monthly, the difference can amount to millions of dollars annually, which makes a monolithic LLM strategy hard to sustain.
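The arithmetic behind the 10-billion-token scenario can be checked with a short sketch. It uses the $30 and $0.25 per-million figures quoted above and makes one simplifying assumption that is not in the original: every token is billed at a single flat rate, with no separate input and output pricing.

```python
def monthly_cost_usd(tokens_per_month: int, price_per_million_usd: float) -> float:
    """Token spend for one month at a flat per-million-token price."""
    return tokens_per_month / 1_000_000 * price_per_million_usd

TOKENS_PER_MONTH = 10_000_000_000  # 10 billion tokens, as in the scenario above
PREMIUM_PRICE = 30.00              # USD per million tokens (figure from the text)
SLM_PRICE = 0.25                   # USD per million tokens (upper SLM figure from the text)

premium_monthly = monthly_cost_usd(TOKENS_PER_MONTH, PREMIUM_PRICE)
slm_monthly = monthly_cost_usd(TOKENS_PER_MONTH, SLM_PRICE)
annual_savings = (premium_monthly - slm_monthly) * 12

print(f"Premium model:  ${premium_monthly:,.0f}/month")   # $300,000/month
print(f"SLM:            ${slm_monthly:,.0f}/month")       # $2,500/month
print(f"Annual savings: ${annual_savings:,.0f}")          # $3,570,000
print(f"Price ratio:    {PREMIUM_PRICE / SLM_PRICE:.0f}x")  # 120x
```

Real bills will differ because input tokens are usually priced lower than output tokens, but the order of magnitude holds.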

The Inference Efficiency Score: SLMs vs. LLMs

In a production environment, Inference Cost is the cost of running the model to generate a response. LLMs are notoriously computationally intensive. The sheer parameter count demands high-end, expensive cloud infrastructure, specifically leading-edge GPUs like the NVIDIA H100 or A100.

| Model Type | Typical Size (Parameters) | Cost Focus | Inference Latency | Hardware Requirements |
| --- | --- | --- | --- | --- |
| Large Language Model (LLM) | 70B – 1T+ | High Token Cost | High (Slow to load, High Memory) | Massive cloud GPUs (H100/A100) |
| Small Language Model (SLM) | 1B – 12B | Low Inference Cost | Low (Fast to load, Low Memory) | Commodity GPUs / Edge Devices |

SLMs drastically reduce Inference Latency and the required GPU memory (VRAM). This lower requirement enables two crucial cost-saving pathways:

- Lower API Costs: When using Cloud APIs (e.g., GPT-4o Mini), the SLM is inherently cheaper per token due to its lower resource consumption and better batch processing capabilities. Cloud providers pass these efficiency savings directly to the customer, making it the most immediate form of cost reduction. This model allows for rapid scaling without massive initial investment.

- Infrastructure Freedom: SLMs can be self-hosted (on-premise or on lower-cost cloud instances) using commodity GPUs (like the NVIDIA RTX 4090 or older V100s), or even deployed at the Edge (on mobile devices). This strategy entirely eliminates recurring token costs and offers superior data sovereignty, a non-negotiable requirement for entities dealing with sensitive PII or regulated financial data. Self-hosting grants full control over the AI lifecycle, a critical component of long-term TCO management.

The Problem of Generalism: The Core LLM Overkill

General-purpose LLMs are designed to handle an enormous spectrum of tasks, from writing sonnets to debugging complex C++ code. This breadth comes at the cost of density and efficiency for specific tasks. Enterprise tasks are often highly repetitive and narrow (document classification, standard email response generation, or simple data extraction), and using a model trained for general knowledge on them is inefficient. SLMs, when properly fine-tuned or distilled, can specialize closely to a domain, delivering the required accuracy (often 95%+) much faster and cheaper than the generalist model. This strategic matching of model size to task complexity is the cornerstone of the 90% operational reduction target: specialized SLMs can achieve the required business outcomes at a fraction of the cost, making them the superior choice for high-frequency, low-complexity workflows.

2. Model Compression Techniques: Unlocking the Small Model Advantage

The exceptional performance-to-cost ratio of modern SLMs is not a fluke; it is the calculated outcome of sophisticated AI engineering focused on Model Compression. These techniques allow smaller models to inherit the core competence of larger ones while drastically minimizing their computational footprint. This technical mastery is what makes the Small Model Strategy viable for enterprise-grade applications.

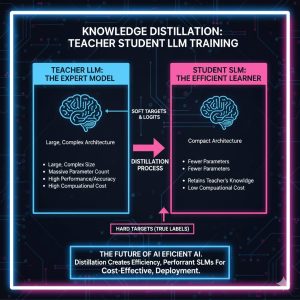

Knowledge Distillation: The Teacher-Student Paradigm for Efficiency

Knowledge Distillation is the most powerful technique for creating high-performing, cost-effective SLMs. It is the process of transferring knowledge from a large, high-performing “Teacher” model (e.g., GPT-4 or Claude Opus) to a smaller, more streamlined “Student” model (e.g., Mistral 7B).

The student model is trained on two data streams:

- Raw Data: The original training corpus.

- Soft Labels (Teacher Outputs): The student is trained to match the teacher model’s output probability distribution over possible answers, not just the single hard, correct label. This allows the student to internalize the nuance of the larger model’s decision-making process.

- Goal: The student learns to mimic the teacher’s sophisticated behavior and high accuracy while requiring significantly less memory. A distilled model often achieves comparable performance (within 5% of the teacher’s accuracy on the target task) while requiring 70% less memory and running up to 3x faster in production.

- Best Use: Ideal for creating domain-specific expertise. For example, distilling a massive model’s ability to interpret legal jargon into a 7B parameter model dedicated solely to contract summarization. This process offers a sustainable path to creating bespoke AI solutions without the enormous cost of training a foundation model from scratch. Furthermore, distillation enhances deployability, allowing the resulting SLM to be run on lower-power devices, facilitating edge computing initiatives.
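The two training signals above are commonly combined into a single loss. The sketch below follows the widely used temperature-scaled formulation: a KL-divergence term against the teacher's softened probabilities plus a cross-entropy term against the hard label. The article does not specify a loss function, so the `temperature` and `alpha` values and the toy logits are illustrative assumptions.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, hard_label,
                      temperature=2.0, alpha=0.5):
    """alpha * soft-label loss + (1 - alpha) * hard-label loss (assumed weighting)."""
    # Soft term: KL(teacher || student) on temperature-softened distributions,
    # scaled by T^2 so its gradient magnitude stays comparable across temperatures.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft_loss = kl * temperature ** 2

    # Hard term: ordinary cross-entropy against the ground-truth class index.
    hard_loss = -math.log(softmax(student_logits)[hard_label])

    return alpha * soft_loss + (1 - alpha) * hard_loss

teacher = [2.0, 1.0, 0.1]        # toy teacher logits for a 3-class task
good_student = [2.0, 1.0, 0.1]   # agrees with the teacher
poor_student = [0.1, 1.0, 2.0]   # disagrees with the teacher
print(distillation_loss(good_student, teacher, hard_label=0))
print(distillation_loss(poor_student, teacher, hard_label=0))
```

The student that reproduces the teacher's distribution pays only the hard-label term, while the disagreeing student is penalized by both terms.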

Model Quantization: The Precision Reduction Strategy for Hardware Savings

Quantization is a technique that reduces the numerical precision of the model’s weights and activations. Most large foundation models are trained and distributed in 16-bit or 32-bit floating-point formats (FP16/BF16 or FP32). Quantization reduces this precision to lower-bit representations, commonly 8-bit or 4-bit integers (INT8/INT4).

- Impact on Footprint: Quantization shrinks the model’s memory footprint and disk size by up to 75%. A 13B parameter model in FP16 (26GB) can be reduced to under 7GB in INT4, making it runnable on commodity, single-card GPUs. This significantly expands the feasible deployment environment.

- Cost Benefit: Smaller memory requirements translate directly to:

  - Faster Loading: Quicker model initialization for rapid scaling.

  - Cheaper Hardware: The model can run on less expensive GPUs with lower VRAM capacity, cutting infrastructure CapEx and OpEx. Organizations can repurpose existing hardware instead of provisioning costly new clusters.

- Trade-Off: While modern techniques (like Quantization-Aware Training or AWQ) minimize the performance hit, aggressive quantization (down to INT4) can result in a slight, but manageable, accuracy loss. Enterprise strategy dictates that quantization should be used only if the accuracy benchmark remains within the acceptable 20% performance-parity threshold of the original model on mission-critical benchmarks. Continuous monitoring is required to ensure this threshold is maintained.
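The footprint figures above follow from bits per weight, and the accuracy trade-off comes from rounding error. The sketch below estimates weight storage and runs a toy symmetric INT8 round trip. It is a simplified illustration, not how AWQ or Quantization-Aware Training work: the per-tensor scale scheme and the sample weights are assumptions, and the footprint ignores activations, KV cache, and quantization metadata.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # fall back to 1.0 for all-zero input
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate float weights from integers and the stored scale."""
    return [q * scale for q in quantized]

print(weight_footprint_gb(13e9, 16))  # 26.0 GB in FP16
print(weight_footprint_gb(13e9, 4))   # 6.5 GB in INT4

weights = [0.42, -1.30, 0.07, 0.91]   # hypothetical weight values
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, max_error)           # each error is at most half a quantization step
```

The rounding error per weight is bounded by half of `scale`, which is why fewer bits (a larger step) cost more accuracy.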

The Role of Fine-Tuning and PEFT in SLM Maintenance

Parameter-Efficient Fine-Tuning (PEFT) techniques, such as LoRA (Low-Rank Adaptation), are essential for the maintenance of SLMs. Instead of retraining the entire model (which is costly even for SLMs), LoRA freezes the original weights and trains only a small fraction of the total parameter count (e.g., less than 1%) in the form of added low-rank matrices. This drastically reduces the memory and compute required for retraining, allowing enterprises to perform rapid, continuous updates to their domain-specific SLMs without requiring massive, expensive GPU clusters. This agility ensures the specialized SLM remains effective and cost-efficient for its entire lifecycle, offering a clear advantage over the slow, costly update cycles of monolithic LLMs.
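The "less than 1%" figure can be made concrete by counting parameters. For a weight matrix of shape `d_out x d_in`, LoRA trains two matrices of shapes `d_out x r` and `r x d_in`. The 4096 x 4096 layer and rank 8 below are hypothetical values chosen for illustration, not taken from any specific model in the article.

```python
def lora_trainable_fraction(d_in: int, d_out: int, rank: int) -> float:
    """Trainable LoRA parameters as a fraction of the frozen full weight matrix."""
    full_params = d_in * d_out           # frozen original weights
    lora_params = rank * (d_in + d_out)  # B (d_out x r) plus A (r x d_in)
    return lora_params / full_params

# Hypothetical projection layer: 4096 x 4096 with LoRA rank 8.
fraction = lora_trainable_fraction(d_in=4096, d_out=4096, rank=8)
print(f"{fraction:.2%} of the layer's parameters are trained")  # 0.39%
```

Doubling the rank doubles the trainable share, so rank is the main knob for trading adaptation capacity against retraining cost.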

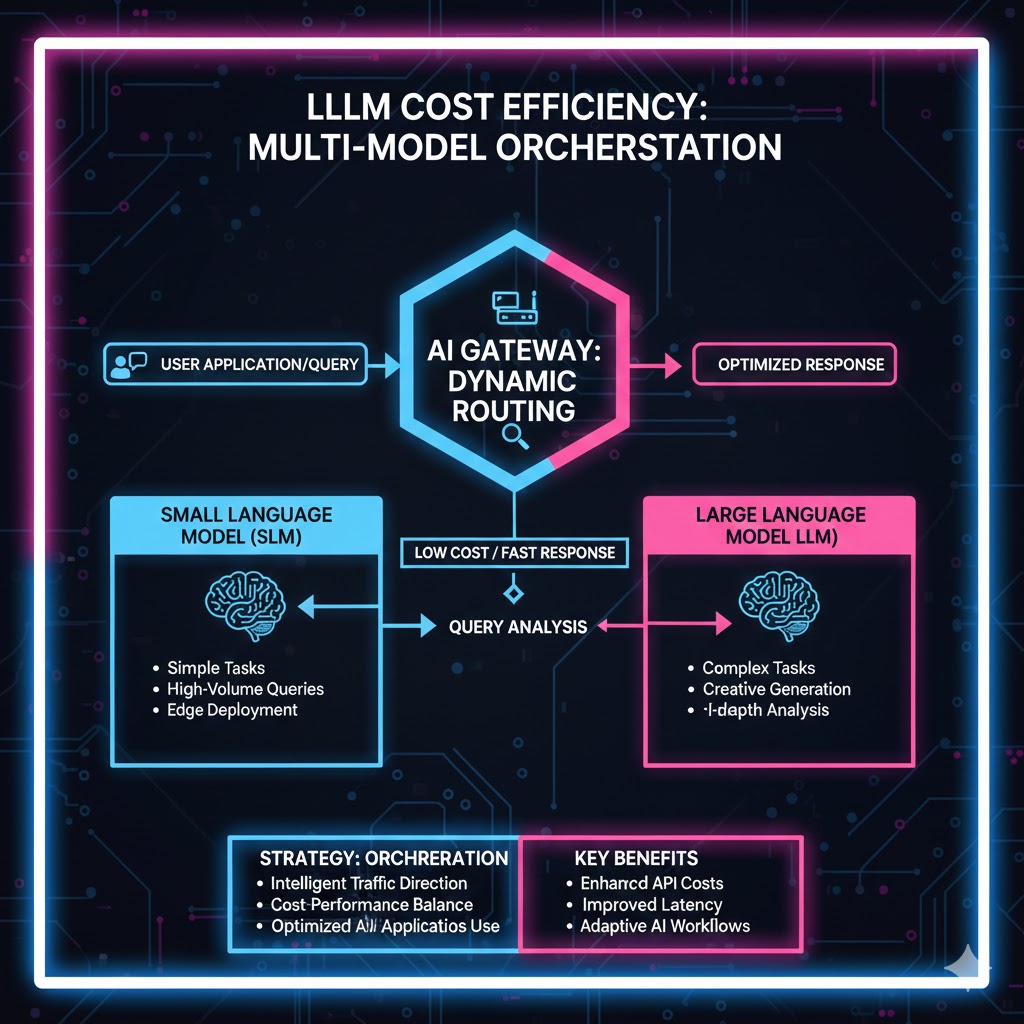

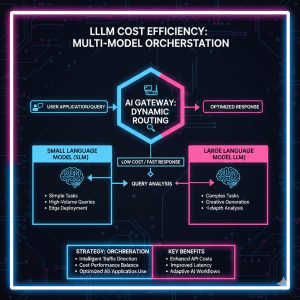

3. The Multi-Model Orchestration Strategy and Dynamic Routing

The foundational shift to utilizing SLMs requires a complementary architectural change: implementing a Multi-Model Orchestration Layer that intelligently routes requests to the most cost-efficient model available. This intelligent routing mechanism is the critical software component required to achieve the 90% Operational Reduction Strategy.

Dynamic Routing and the LLM Gateway: The Traffic Controller

A centralized LLM Gateway (or AI Gateway) is essential for managing a mixed portfolio of models. This portfolio will typically include a high-cost generalist LLM (the safety net), several mid-tier models, and multiple specialized, self-hosted SLMs. The gateway acts as a smart router that enforces cost and performance policies in real-time.

- Request Classification: An initial, extremely low-cost model (or a specialized classifier) analyzes the incoming user request’s intent, domain, and complexity.

- Complexity Scoring: It assigns a Complexity Score (e.g., 1-5) to the request, based on predefined enterprise standards.

  - Score 1-2 (Simple): Requests requiring summarization, classification, basic Q&A, or data extraction. Action: Route to SLM (e.g., Fine-tuned Llama 3 8B).

  - Score 3-4 (Standard): Requests needing multi-turn dialogue, creative content generation, or standard reasoning. Action: Route to Mid-Tier (e.g., Claude 3.5 Sonnet or Mistral Large).

  - Score 5 (Complex): Requests demanding multi-step planning, high-stakes analysis, or complex coding/debugging. Action: Route to Premium LLM (e.g., GPT-4o or Claude Opus).

- Policy Enforcement: The gateway enforces enterprise policy, for example, strictly capping the use of premium (Score 5) models at 10% to 20% of total daily workload, regardless of the overall demand curve.
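The scoring bands and the premium cap above can be sketched as a small router. This is a minimal illustration, not a production gateway: the complexity score is passed in directly (the classifier is out of scope), the tier names are placeholders, and downgrading to the mid-tier when the cap is hit is an assumption, since the text does not say what happens to capped requests.

```python
from dataclasses import dataclass

def tier_for_score(score: int) -> str:
    """Map a 1-5 complexity score to a model tier, following the bands above."""
    if not 1 <= score <= 5:
        raise ValueError(f"complexity score must be 1-5, got {score}")
    if score <= 2:
        return "slm"
    if score <= 4:
        return "mid_tier"
    return "premium"

@dataclass
class Gateway:
    premium_cap: float = 0.20   # max share of requests allowed on the premium tier
    total_requests: int = 0
    premium_requests: int = 0

    def route(self, score: int) -> str:
        """Pick a tier for one request and enforce the premium usage cap."""
        tier = tier_for_score(score)
        self.total_requests += 1
        if tier == "premium":
            projected_share = (self.premium_requests + 1) / self.total_requests
            if projected_share > self.premium_cap:
                return "mid_tier"  # assumption: downgrade instead of rejecting
            self.premium_requests += 1
        return tier

gateway = Gateway(premium_cap=0.20)
print([gateway.route(score) for score in [1, 2, 3, 4, 5, 5]])
# ['slm', 'slm', 'mid_tier', 'mid_tier', 'premium', 'mid_tier']
```

The second Score 5 request is downgraded because serving it on the premium tier would push premium usage to 2 of 6 requests, above the 20% cap.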

This dynamic routing alone can lead to a 30% to 50% reduction in token spend by eliminating model overkill and ensuring every token spent is justified by the task’s complexity. The core principle of this strategy, intelligently delegating tasks to the most efficient resource, echoes the broader trend of automation that is setting the stage for how future corporate operations will be managed, a shift detailed further in “The Autonomous AI Agents Daily Life Revolution: How Delegation Will Define 2026.” This intelligent delegation allows organizations to scale AI adoption without scaling cost linearly.

Optimizing Context and Prompt Discipline: Eliminating Token Waste

Beyond model selection, meticulous Prompt Engineering Discipline is paramount for reducing Token Waste, the silent budget killer. Context windows, though vast, are expensive.

- Contextual Chunking: Instead of feeding entire documents into the model, utilize Retrieval-Augmented Generation (RAG) principles to pull only the minimal, most relevant text “chunks” for the prompt. This reduces the input token count drastically and simultaneously improves the accuracy of the model by reducing noise in the input.

- Prompt Compression and Condensation: Engineers must be trained to use concise, standardized language and eliminate redundant filler phrases from system instructions. By replacing lengthy system prompts with hyper-efficient templates and minimizing boilerplate, input token count can be reduced by up to 35% per request across an organization’s standard workflows. This requires cross-functional collaboration between engineering and content teams.

- Semantic Caching Implementation: Studies show up to 31% of enterprise LLM queries are semantically identical or nearly identical to previous queries (especially in customer support and documentation searches). A Semantic Cache stores the embedding of previous queries and their responses. If a new query is semantically similar (above a defined threshold, e.g., cosine similarity > 0.95), the cached answer is instantly returned instead of calling the costly LLM API. This strategy can reduce API calls by up to 70% for repetitive query applications, representing one of the fastest ROI opportunities in LLM FinOps and significantly reducing latency for common user interactions.
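The semantic cache described above reduces to a nearest-neighbor lookup with a similarity threshold. The sketch below uses a linear scan and hand-written two-dimensional vectors as stand-ins for real embeddings; in practice the embeddings would come from an embedding model and the scan would be replaced by a vector index. The class and method names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class SemanticCache:
    def __init__(self, threshold: float = 0.95):
        self.threshold = threshold  # similarity cutoff from the text
        self.entries = []           # list of (embedding, response) pairs

    def store(self, embedding, response: str) -> None:
        self.entries.append((list(embedding), response))

    def lookup(self, embedding):
        """Return the best cached response above the threshold, else None."""
        best_score, best_response = 0.0, None
        for cached_embedding, response in self.entries:
            score = cosine_similarity(embedding, cached_embedding)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score > self.threshold else None

cache = SemanticCache(threshold=0.95)
cache.store([1.0, 0.0], "Reset your password from the account settings page.")
print(cache.lookup([0.99, 0.05]))  # near-duplicate query: cache hit
print(cache.lookup([0.0, 1.0]))    # unrelated query: None, so call the model
```

A miss returns `None`, at which point the caller would invoke the model and `store` the new embedding and response.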

4. Long-Term Investment & TCO Analysis: The Infrastructure Decision

The strategic adoption of SLMs provides enterprises with the leverage to choose their deployment infrastructure—balancing the immediate convenience of Serverless APIs against the profound long-term TCO benefits of On-Premise or self-hosted cloud environments. This decision dictates the ultimate ceiling on cost reduction and is a critical point for CFO approval.

The Total Cost of Ownership (TCO) Imperative: Breaking Even

The decision hinges on volume. For enterprises with high, predictable request volume (e.g., consistently exceeding 10 million non-trivial requests per month), the initial Capital Expenditure (CapEx) in a small cluster of commodity GPUs (for self-hosting quantized SLMs) can be quickly offset by operational savings. The break-even point when comparing the fixed cost of hardware versus the variable, high token cost of commercial LLM APIs is typically reached in 6 to 12 months. After this period, the organization operates at a near-zero recurring token cost for the bulk of its AI workload, transforming a major operational expense into a depreciating asset.
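The break-even reasoning can be expressed as a one-line formula: hardware CapEx divided by the monthly savings from replacing API spend with self-hosting costs. The dollar amounts below are hypothetical placeholders chosen only to show the calculation; they are not figures from the article.

```python
def break_even_months(capex_usd: float,
                      monthly_api_cost_usd: float,
                      monthly_selfhost_opex_usd: float):
    """Months until hardware CapEx is recovered; None if self-hosting never pays off."""
    monthly_savings = monthly_api_cost_usd - monthly_selfhost_opex_usd
    if monthly_savings <= 0:
        return None
    return capex_usd / monthly_savings

# Hypothetical inputs: $120k GPU cluster, $20k/month API bill replaced,
# $5k/month for power, hosting, and maintenance of the self-hosted SLMs.
months = break_even_months(capex_usd=120_000,
                           monthly_api_cost_usd=20_000,
                           monthly_selfhost_opex_usd=5_000)
print(f"Break-even after {months:.1f} months")  # 8.0 months
```

The self-hosting OpEx term matters: if power, hosting, and staff costs approach the API bill, the function returns `None` and the CapEx is never recovered.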

| Deployment Model | Compute Cost Model | Initial Investment | Cost Reduction Strategy | Risk Profile |
| --- | --- | --- | --- | --- |
| Serverless (Cloud API) | Pay-Per-Token (Variable) | Low (Zero infrastructure cost) | Dynamic Routing, Semantic Caching | Low OpEx Control (Highly sensitive to vendor token price inflation) |
| On-Premise / Self-Hosted SLM | Reserved Compute (Fixed Cost) | High (GPU hardware acquisition, Cooling, Maintenance) | Quantization, Fine-Tuning (Eliminates recurring token cost) | High Governance (Full data sovereignty, but high maintenance cost) |

The long-term value of the SLM self-hosting strategy is the elimination of Vendor Lock-in Risk and Token Price Inflation Risk. Furthermore, it offers maximum control over sensitive Intellectual Property (IP) and Personally Identifiable Information (PII)—a critical governance factor for regulated industries (Financial Services, Healthcare, Government). By housing the model within their own protected perimeter, organizations bypass data privacy compliance hurdles associated with transmitting sensitive data to third-party providers, achieving total data sovereignty.

Strategic Budget Allocation: CapEx vs. OpEx

The transition to SLMs requires a fundamental shift in how AI budgets are allocated:

- Initial Phase (OpEx Focus): Rely primarily on cheap cloud SLM APIs (e.g., GPT-4o Mini) using the Dynamic Routing strategy to immediately curb token spending. This validates the business case and accuracy benchmarks before major capital is committed.

- Scaling Phase (CapEx Focus): Once volume and usage patterns are stable, transition the highest-volume, most repetitive tasks to self-hosted, highly compressed SLMs (e.g., distilled Llama 3 or Mistral models running on dedicated GPUs). This converts variable OpEx token costs into predictable, fixed CapEx depreciation costs. This strategic capital investment is the final lever for achieving the deepest cost cuts and providing financial predictability.

This infrastructure choice is not a binary one; a successful enterprise strategy involves a Hybrid Architecture, where self-hosted SLMs handle 80% of the workload, and premium LLM APIs are reserved for the critical, high-value 20%, ensuring optimal performance and cost balance. Continuous auditing of the utilization ratio is mandatory for maintaining the desired cost profile.

5. REALUSESCORE Final Verdict: Strategic Roadmap and Score

The comprehensive REALUSESCORE analysis confirms that the strategic adoption of Small Language Models (SLMs), combined with a Multi-Model Orchestration Strategy, is the only viable path to achieving sustainable, large-scale enterprise AI deployment. This architectural shift provides the necessary operational cost reductions of 80% to 90% while significantly enhancing data governance, model specialization, and deployment agility.

The Definitive Strategy for Enterprise Cost Leadership

| User Profile | Primary Pain Point | Recommended Strategy | Cost Reduction Goal |
| --- | --- | --- | --- |
| High-Volume / Repetitive Task Leader (e.g., Customer Service) | Excessive Token Spend | Dynamic Routing + Semantic Caching | 80% Reduction in API Calls |
| Regulated Industry / IP Focused Leader (e.g., Finance, Legal) | Data Sovereignty Risk | Self-Hosted SLM + Quantization (INT4/INT8) | Eliminate Recurring Token Cost & Data Risk |
| AI Development & Deployment Leader | Slow Iteration/High Training Cost | Knowledge Distillation + PEFT | 3x Faster Model Iteration Cycle |

REALUSESCORE.COM Analysis Score

This score table provides a comprehensive analysis of the Small Model Utilization Strategy across key performance and financial metrics, illustrating its superior viability compared to a traditional monolithic LLM approach.

| Evaluation Metric | Max. Potential Impact | SLM Strategy Score (Out of 10) | Rationale |
| --- | --- | --- | --- |
| Operational Cost Reduction (OpEx) | 90% (Token/Compute) | 9.5 | Highest Impact. Achieved via Quantization and Dynamic Routing to low-cost models. |
| Model Specialization & Accuracy | Performance Parity | 9.0 | Achieved via Knowledge Distillation and Fine-Tuning; often outperforms generalist LLMs on niche, critical tasks. |
| Deployment Flexibility & Latency | Edge/On-Premise Capability | 9.5 | SLMs enable low-latency inference on commodity hardware or at the Edge, crucial for real-time systems. |
| Governance & IP Protection | Data Sovereignty | 9.2 | Self-hosting SLMs eliminates cloud data leakage risk for PII/IP, a massive compliance advantage. |
| Total Cost of Ownership (TCO) | Long-Term Viability | 9.3 | Mitigates vendor lock-in and token inflation risk, leveraging fixed CapEx for massive OpEx savings. |

The move from massive, monolithic LLMs to a choreographed system of specialized SLMs is not merely a cost-cutting measure; it is the defining strategic shift that will enable the economically viable, large-scale deployment of generative AI across the enterprise landscape through 2026 and beyond.