The Bifurcation of Trust: LLMs as Both Critical Threat and Ultimate Defense

The rise of Large Language Models (LLMs) represents the single greatest inflection point in AI and Data Security since the advent of the internet. These sophisticated models, while driving unprecedented productivity, have simultaneously opened an entirely new, complex frontier of Cyber Threats. The focus has shifted from protecting network perimeters to securing the data and algorithms within the AI itself. For data security professionals and corporate executives, the challenge is clear: mastering the defense mechanisms required to prevent catastrophic data leakage and exploitation, especially as LLMs become embedded in every layer of enterprise operation. This comprehensive analysis delves into the technical vulnerabilities and the cutting-edge, AI-powered defenses necessary to survive this new era.

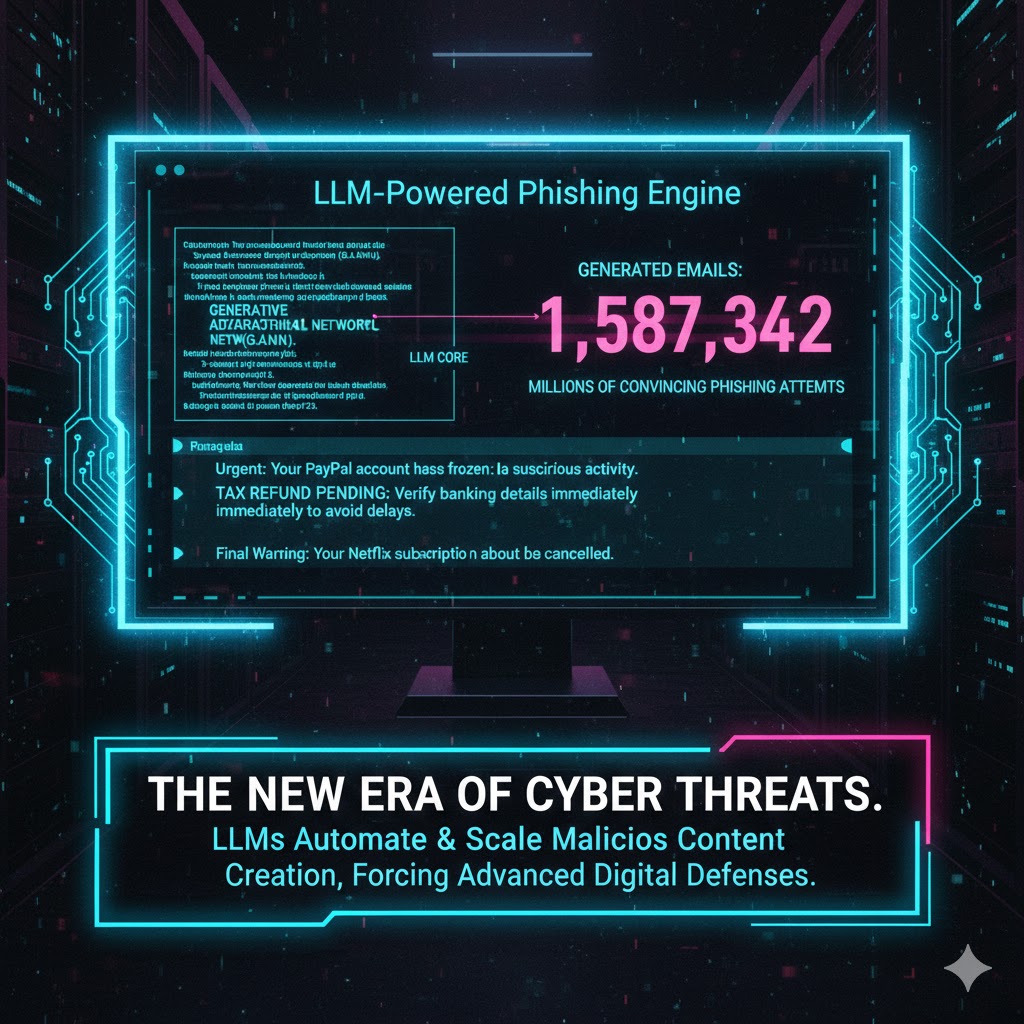

1. LLMs as a New Threat Vector: Automated Attack Surface

LLMs possess unique characteristics that make them exceptionally potent tools for scaling and accelerating malicious cyber campaigns. The threat is not simply from the AI, but through it.

Prompt Injection and Data Extraction

The most direct and immediate threat is Prompt Injection, where attackers manipulate the LLM’s instructions to bypass safety guardrails or extract confidential data.

-

Indirect Prompt Injection: The attacker inserts a malicious command into an external data source (like a document, website, or email) that the LLM is instructed to summarize or process. When the LLM accesses this source, the hidden prompt is executed, potentially causing the model to leak sensitive information from its current session or database connection.

-

Jailbreaking: Attackers use sophisticated linguistic techniques to coerce the model into ignoring its pre-set system prompts (e.g., instructing it not to generate malicious code or phishing emails), effectively turning a benign AI into a weaponized content generator.

The Automation of Cyber Threats

LLMs dramatically lower the barrier to entry for highly sophisticated, convincing cyberattacks:

-

Massive Phishing Scalability: LLMs can generate millions of unique, grammatically perfect, and highly personalized phishing emails (Spear-Phishing-as-a-Service) that are virtually undetectable by traditional filtering mechanisms which rely on identifying common linguistic patterns.

-

Code Vulnerability Generation: A novice attacker can leverage an LLM to quickly generate functional exploit code for known vulnerabilities, or even generate functional, complex malware, dramatically increasing the volume and speed of zero-day exploitation attempts 1.3, 2.5.

2. Data Leakage and Integrity Risks in the LLM Lifecycle

The full lifecycle of an LLM—from data ingestion to deployment—is fraught with inherent data security challenges, particularly concerning the integrity and confidentiality of proprietary enterprise data.

The Training Data Poisoning Attack

Attackers target the LLM’s most fundamental component: its training data. Data Poisoning involves inserting intentionally erroneous, biased, or malicious data into the training set.

-

Backdoor Insertion: This can lead to a “backdoor” being inserted into the final model, where a specific, benign input (e.g., a certain name or phrase) causes the model to generate a malicious or nonsensical output, compromising its reliability during production 3.2.

-

Bias and Ethical Risks: Poor data governance can lead to the LLM inheriting and amplifying harmful biases, creating ethical and legal liabilities for the deploying enterprise. Understanding and mitigating these fundamental model risks is crucial for security. For a deep dive into model governance and mitigating fundamental risk, review our analysis: AI Ethics & Bias in Large Language Models (LLMs): A Developer’s Guide to Fair Algorithms

Inadvertent Data Leakage (PII and IP Exposure)

The models themselves present a persistent risk of Inadvertent Leakage of Personally Identifiable Information (PII) or Intellectual Property (IP).

-

Memorization: LLMs can “memorize” specific, sensitive examples from their training data. An attacker, through targeted prompting, can potentially elicit the exact wording of a confidential document or the PII of an individual if it was included in the training set 4.1.

-

Prompt Logging: Enterprises often log user prompts for auditing and model refinement. Without stringent obfuscation and encryption, this log becomes a centralized, highly lucrative target for attackers seeking proprietary information or trade secrets.

![]()

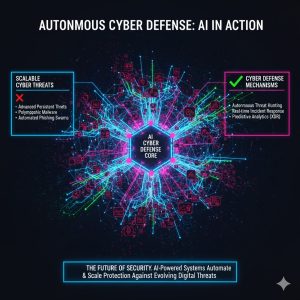

3. AI-Powered Defense Mechanisms: Building the Secure Perimeter

The solution to AI-driven threats must be AI-driven defenses. The new frontier demands the implementation of sophisticated, autonomous, and adaptive Defense Mechanisms that operate at machine speed.

Adaptive and Autonomous Threat Detection

Traditional signature-based antivirus or firewall rules are too slow to counter the rapid mutation of AI-generated threats.

-

AI-Driven SIEM and XDR: Security Information and Event Management (SIEM) and Extended Detection and Response (XDR) systems are now powered by AI/ML models that analyze petabytes of telemetry data in real-time. These systems can identify subtle, anomalous patterns indicative of a sophisticated, LLM-generated attack (e.g., coordinated, high-volume access attempts from distributed sources) with far greater accuracy and speed than human analysts 5.3.

-

Zero Trust Architectures (ZTA): ZTA, reinforced by AI, ensures that no user, device, or application is implicitly trusted, regardless of its location. AI continuously monitors trust scores based on behavioral analytics, providing a dynamic defense against compromised LLM-interacting applications.

Securing the Production Pipeline: MLOps and Model Monitoring

The deployment environment for AI must be as secure as the models themselves. A new discipline has emerged to ensure this stability and security.

-

Secure MLOps (SecMLOps): This practice integrates security testing and governance directly into the Machine Learning Operations pipeline, treating the model as a living, executable application that requires constant scrutiny. This includes automated checks for data drift, concept drift, and adversarial attacks immediately upon deployment 6.2. For strategies on maintaining the stability and security of AI in production, see our specialized report: MLOps and Deployment: Ensuring Stable AI in Production Environments and Avoiding Drift

4. Regulatory and Strategic Response: Global Governance of LLMs

The speed of AI deployment is rapidly outstripping the pace of global legal and ethical governance, forcing corporations to adopt a proactive, strategic approach to compliance.

Global Regulation Landscape

Governments worldwide are grappling with how to regulate powerful LLMs, creating a complex and potentially fractured compliance landscape for multinational enterprises.

-

EU AI Act and US Executive Orders: The EU AI Act introduces strict classification and compliance requirements for “high-risk” AI systems, including stringent data quality and documentation mandates. Meanwhile, US executive orders place significant emphasis on national security, watermarking, and the responsible development of foundation models 7.1.

-

Corporate Liability: Enterprises are increasingly liable for the output of their deployed LLMs, meaning they must prove their models were trained on compliant data, are free from harmful bias, and have adequate security protocols to prevent misuse 8.4. To understand the legal and market impact of these new laws, we recommend reviewing our analysis on: The Regulation of Generative AI (US/EU): New Laws and Their Impact on Content Creation Platforms

Strategic Investment in Future Defense

The strategic imperative for any major corporation is to shift security spending from reactive defense to proactive, AI-native security architecture. This includes:

-

Homomorphic Encryption (HE): Investing in technologies like HE, which allow data to be processed and analyzed while remaining encrypted, offering a mathematical guarantee against data leakage from LLMs.

-

Federated Learning: Utilizing techniques that allow models to be trained across multiple decentralized datasets (e.g., across different corporate divisions) without ever centralizing the raw data, mitigating the risk of a single point of failure and mass data breach.

Final Verdict: The Need for an AI-Native Security Posture

The AI and Data Security landscape has been permanently redefined by LLMs. They are not merely sophisticated tools; they are complex, semi-autonomous entities that demand an entirely new security posture. The age of the static, signature-based defense is over. Only enterprises that embrace AI-native security mechanisms—integrating advanced detection, secure MLOps, and global regulatory compliance directly into their AI strategy—will be able to harness the power of LLMs while mitigating the existential cyber risks they present. Investing in this AI-native defense is no longer a choice; it is the mandatory future-proofing strategy for the modern digital enterprise.

REALUSESCORE.COM Analysis Scores

| Evaluation Metric | Score (Out of 10.0) | Note/Rationale |

| LLM-as-a-Threat Vector Severity | 9.8 | High due to prompt injection, jailbreaking, and automated phishing scalability. |

| Data Leakage Risk in Training | 9.5 | Significant risk of PII/IP exposure via memorization and data poisoning attacks. |

| Efficacy of AI-Powered Defense | 9.0 | Highly effective (e.g., AI-driven XDR) but requires significant investment and continuous model retraining. |

| Regulatory and Compliance Burden | 9.6 | Global regulatory fragmentation (EU AI Act, US Orders) imposes severe, non-negotiable compliance costs. |

| Strategic Future-Proofing Urgency | 9.9 | Adoption of SecMLOps and Homomorphic Encryption is critical for long-term viability. |

| REALUSESCORE.COM FINAL SCORE | 9.6 / 10 | LLMs create a crisis-level security challenge that only an adaptive, AI-native defense strategy can meet. |